In this blog post, Patrick will tell you how he initiated and led usability testing of a new medical DNA analysis system at a young company.

He will share what he did, and how, to test and verify the user-friendliness and learnability of the system in a regulated environment, and what he learned from the applicable standards. He will also discuss the challenges of deploying a transparent and auditable usability program that uses different heuristics, methods and tools and involves various intended users.

He will then go on to discuss his experience of working with a diverse team of biologists, researchers, and hardware and software engineers, and how collaboration and perseverance led to well-received test results that made him, and the laboratory staff, enthusiastic about usability testing. These experiences should help you when you set up your own usability testing.

Setting up usability testing for an innovative medical device

The context and the system

A young company is developing a new and revolutionary molecular diagnostics system. The system is intended to automate DNA analysis of patient samples to check whether a patient has a disease or infection, e.g. cancer or MRSA. Currently, such analyses are laborious and complex, and are therefore often carried out in batches in central laboratories. This results in waiting time and, even worse, uncertainty for the patient. The great advantage of this system is that, with a specific cartridge, the analysis can be performed with minimal user intervention: “sample in – result out” in about 1.5 hours.

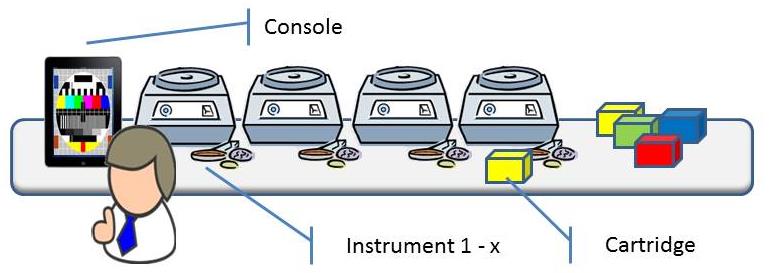

The system consists of a console, one or more instruments and (disposable) cartridges containing specific chemicals. A patient sample is introduced into the cartridge with tweezers, a pipette or a swab. The cartridge is closed and inserted into an available instrument. The instrument processes the cartridge, analyses the data and determines the result. The console supports the workflow for creating analysis requests and making the results available.

Risk management & Regulations

Regulatory bodies such as the FDA consider “user-friendliness”, “learnability” and “error prevention” very important. This is why it is important to comply with IEC 62366, the standard for the application of usability engineering to medical devices. If you comply with this standard, there is less to explain to the FDA.

From the beginning of the system's development, attention was paid to usability, but the company was still looking for a suitable usability test approach. Therefore, as test architect for Verification, I composed a test approach and guided its implementation and execution.

Important elements from IEC 62366:

- Risk management must be part of the engineering approach for medical systems. Think of active prevention of (user) errors, such as input validation and a robust workflow, or designing the cartridge so that it can be placed into the instrument in only one way.

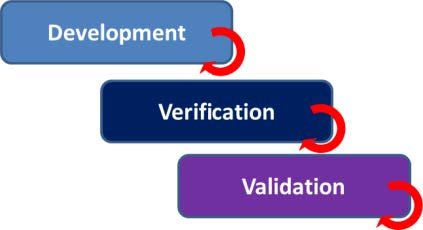

- Iterative development and testing: evaluate as early as possible, through reviews and testing during the design, verification and validation phases of product development (V-model).

Usability Program

Based on the above, the following test approach was recommended:

- Analyse all requirements and mark those that are usability related. This will demonstrate that usability has been taken care of throughout the development.

- Compose a usability test program containing appropriate tests for the various V-model phases (development, verification and validation). Make use of heuristics and methods well-known in the software world, such as Heuristic Evaluation and Software Usability Measurement Inventory (SUMI).

The usability test program consisted of:

- Reviews and testing with internal users during the development phase.

- Usability testing and a survey with external users in the company laboratory during the verification phase.

- Observations and a survey with external users in their own workplace during the (clinical) validation.

Deployment and execution

Analysing the requirements was a relatively easy step: all system requirements were analysed and marked if a usability aspect was recognizable. These requirements were then verified during the regular verification tests, which showed that the system meets these usability aspects.

For the implementation of the usability testing, we assembled a team with a project manager, a biological laboratory representative and myself as test architect.

Available use cases formed an important basis for our usability testing. However, these use cases were not yet complete, so we tackled that first. In a workshop, we defined the system life-cycle stages: from installation, through use and service, up to removal from the laboratory. We then revised the use cases for each stage and added details such as the type of user, knowledge level and frequency of use.

In parallel, the first exploratory usability tests were conducted during development. Test charters were prepared for some common use cases and for issues on which fast feedback was needed: inserting and cutting a swab within the cartridge, and inserting the cartridge into the instrument. These exploratory tests were very effective and efficient: within a few hours we got a lot of feedback! “For the record” and for further analysis, we made notes and video recordings.

This put the usability test program “on the map”. It boosted collaboration between developers and testers, as well as the enthusiasm of management and the usability team.

Reviews

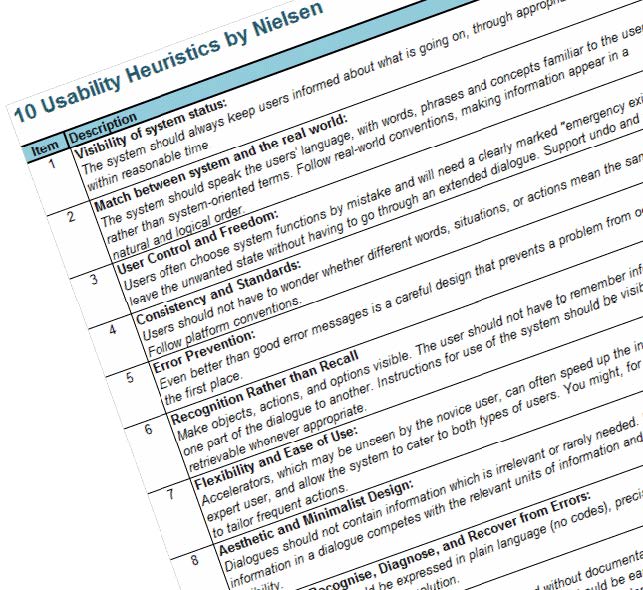

Somewhat late, and in parallel with these tests, usability reviews were conducted during the development phase using Nielsen's 10 usability heuristics. Despite careful preparation, including an explanatory kick-off, the review got off to a slow start. One of the objections: “But haven't we already reviewed this?” True, but never with a focus on usability. Only after the first usability test results came in, and after repeated explanation of the heuristics, did we finally get feedback. Several review findings had also been found during our exploratory testing: a missed opportunity to find and fix these issues earlier, i.e. before testing. For me, a good reason to focus more on early (heuristic) reviews in my next project.
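One way to keep a heuristic review focused on usability is to tag every finding with the heuristic it violates and tally the findings per heuristic. The sketch below is illustrative only: the `tally_findings` function and its `(heuristic_number, description)` input format are hypothetical and not the tooling used in this project; the heuristic names follow Nielsen's published list.

```python
from collections import Counter

# Jakob Nielsen's 10 usability heuristics, numbered 1..10.
NIELSEN_HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognize, diagnose, and recover from errors",
    "Help and documentation",
)


def tally_findings(findings):
    """Count review findings per heuristic.

    findings: iterable of (heuristic_number, description) pairs, where
    heuristic_number is 1..10 (hypothetical input format).
    Every heuristic appears in the result, including those with 0 findings.
    """
    counts = Counter()
    for number, _description in findings:
        if not 1 <= number <= len(NIELSEN_HEURISTICS):
            raise ValueError(f"unknown heuristic number: {number}")
        counts[number] += 1
    return {name: counts[i] for i, name in enumerate(NIELSEN_HEURISTICS, start=1)}


# Hypothetical findings, for illustration only.
summary = tally_findings([
    (5, "example finding A"),
    (1, "example finding B"),
    (5, "example finding C"),
])
for heuristic, count in summary.items():
    if count:
        print(f"{count}  {heuristic}")
```

Listing heuristics with zero findings as well makes it easy to ask reviewers whether those heuristics were actually considered.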

Test Specification

For the usability tests with real laboratory users during the verification phase, we needed test specifications. The scenarios were based on the use cases and were grouped by user type (novice users, experienced users, administrators), taking into account the basic, alternative and exception paths as well as the frequency of use. The scenarios (test protocols) deliberately had only a limited level of detail: if users could work with the system independently without further explanation, this would demonstrate “learnability”.
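The grouping described above can be sketched as a small data structure: scenarios carry their use case, user type, path type and frequency of use, are grouped per user type with the most frequent tasks first, and are checked for missing basic, alternative or exception paths. This is a minimal sketch under stated assumptions: the `Scenario` class, the `runs_per_week` frequency measure and the example use-case names are hypothetical, not the project's actual test specification format.

```python
from collections import defaultdict
from dataclasses import dataclass

REQUIRED_PATHS = ("basic", "alternative", "exception")


@dataclass(frozen=True)
class Scenario:
    use_case: str
    user_type: str      # e.g. "novice", "experienced", "administrator"
    path: str           # one of REQUIRED_PATHS
    runs_per_week: int  # hypothetical measure of frequency of use


def group_by_user_type(scenarios):
    """Group scenarios per user type, most frequently used tasks first."""
    groups = defaultdict(list)
    for scenario in scenarios:
        groups[scenario.user_type].append(scenario)
    return {
        user_type: sorted(items, key=lambda s: s.runs_per_week, reverse=True)
        for user_type, items in groups.items()
    }


def uncovered_paths(scenarios):
    """Per use case, list the basic/alternative/exception paths with no scenario yet."""
    covered = defaultdict(set)
    for scenario in scenarios:
        covered[scenario.use_case].add(scenario.path)
    return {
        use_case: [p for p in REQUIRED_PATHS if p not in paths]
        for use_case, paths in covered.items()
        if not set(REQUIRED_PATHS) <= paths
    }


# Hypothetical scenarios, for illustration only.
scenarios = [
    Scenario("Run analysis", "novice", "exception", 2),
    Scenario("Run analysis", "novice", "basic", 50),
    Scenario("Manage user accounts", "administrator", "basic", 1),
]
print(uncovered_paths(scenarios))
```

Whether every use case really needs all three path types is a project decision; the check only makes the gaps visible.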

Test sessions

The next important step was to find and invite representative (external) users for the test sessions. Together with the marketing department, we found users with different knowledge and experience levels, of various ages and from different countries: from Norway to Gibraltar. Keep in mind that curious people who are willing to participate in your test might be too smart to be representative of your intended user group. However, sometimes you have to make do with what you can get…

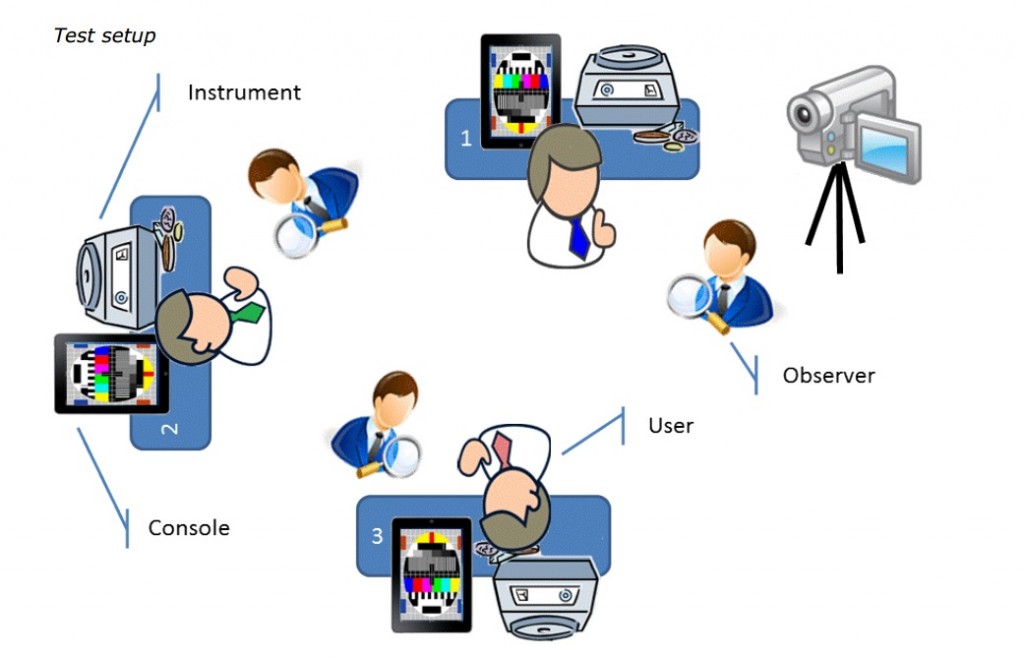

We organized sessions of about 1 to 1.5 days, grouped by knowledge and experience level. After a general introduction to the company, the system and the usability testing, our guests were given a short user training of approximately 30 minutes on the system in a laboratory environment. Then it was their turn to execute the test protocols. For each user (max. 3 per session), one observer was present to observe and take notes. In principle, the observer did not assist the user during test execution.
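The session constraints above (one experience level per session, at most three users, one observer per user) can be turned into a simple planning helper. This is a hypothetical sketch, not how the sessions were actually planned; the `plan_sessions` function and the participant data are illustrative assumptions.

```python
from collections import defaultdict

MAX_USERS_PER_SESSION = 3  # "max. 3 per session", as described in the text


def plan_sessions(participants, max_per_session=MAX_USERS_PER_SESSION):
    """Split participants into sessions, one experience level per session.

    participants: list of (name, level) pairs.
    Returns a list of (level, names) tuples; each session has at most
    max_per_session participants, and needs one observer per participant.
    """
    by_level = defaultdict(list)
    for name, level in participants:
        by_level[level].append(name)
    sessions = []
    for level in sorted(by_level):
        names = by_level[level]
        # Fill sessions greedily in the order participants were listed.
        for start in range(0, len(names), max_per_session):
            sessions.append((level, names[start:start + max_per_session]))
    return sessions


# Hypothetical participants, for illustration only.
participants = [("P1", "novice"), ("P2", "experienced"), ("P3", "novice"),
                ("P4", "novice"), ("P5", "experienced"), ("P6", "novice")]
for level, names in plan_sessions(participants):
    print(f"{level}: {names} -> {len(names)} observer(s)")
```

Greedy filling can leave a session with a single user; a real plan might rather balance session sizes or merge a lone participant into another day.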

For evidence and further analysis, video recordings were again made, using a basic handheld video camera that was visible but strategically placed (see picture below).

Everything came together during these sessions: careful preparation, a user-friendly system and enthusiastic users! The level of detail of the test protocols was just right. The users were able to perform their tasks independently and according to plan, with minimal instruction and intervention.

After each session, a feedback round was held with our guests, starting with a questionnaire. The questionnaire was based on the SUMI questionnaire, supplemented with similar questions about the system-specific workflow, e.g. from the insertion of the patient sample up to the presentation of the clinical result. The guests were also asked whether they would recommend the system (Net Promoter Score) and what their top 3 strengths and weaknesses were.
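The article does not say exactly how the Net Promoter Score was calculated. The standard definition asks how likely respondents are to recommend the product on a 0 to 10 scale, counts 9 and 10 as promoters and 0 to 6 as detractors, and reports the percentage of promoters minus the percentage of detractors. A minimal sketch, assuming that standard scale:

```python
def net_promoter_score(scores):
    """Standard Net Promoter Score for answers on a 0..10 scale.

    Promoters answer 9 or 10, detractors 0 to 6; passives (7 or 8) only
    count in the total. The result ranges from -100 to +100.
    """
    if not scores:
        raise ValueError("at least one answer is needed")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("answers must be on a 0..10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical answers, for illustration only.
print(net_promoter_score([10, 9, 8, 7, 6]))  # prints 20.0
```

With the small number of participants in a usability session, a single answer moves the score considerably, so it is best read together with the written top-3 feedback.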

Subjective vs. Objective

One aspect of usability is its subjectivity, which cannot be made fully objective. According to [ISO 9126], usability is “the capability of the software to be understood, learned, used and attractive to the user, when used under specified conditions”. We regularly had discussions with the developers about subjective topics such as ‘attractive’ and ‘inconvenient’.

Analysis of the observations and questionnaires confirmed subjective opinions and quantified them, which helped us decide whether or not to address a usability issue.
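A simple way to turn scattered subjective remarks into numbers is to count, per issue, how many different participants raised it in the observation notes or questionnaires. The sketch below assumes a hypothetical `(participant_id, issue)` report format and a hypothetical 30% decision threshold; neither comes from the project itself.

```python
from collections import defaultdict


def issue_support(reports, n_participants):
    """Share of participants who raised each issue.

    reports: iterable of (participant_id, issue) pairs collected from
    observation notes and questionnaire comments (hypothetical format).
    A participant who raises the same issue twice is counted once.
    """
    raised_by = defaultdict(set)
    for participant, issue in reports:
        raised_by[issue].add(participant)
    return {issue: len(ids) / n_participants for issue, ids in raised_by.items()}


def issues_to_address(reports, n_participants, threshold=0.3):
    """Issues raised by at least `threshold` of the participants, most common first.

    The 0.3 threshold is a hypothetical decision rule, not one from the project.
    """
    support = issue_support(reports, n_participants)
    selected = [issue for issue, share in support.items() if share >= threshold]
    return sorted(selected, key=lambda issue: support[issue], reverse=True)


# Hypothetical reports from 6 participants, for illustration only.
reports = [("P1", "issue A"), ("P1", "issue A"), ("P2", "issue A"),
           ("P3", "issue A"), ("P4", "issue B"), ("P2", "issue C"), ("P5", "issue C")]
print(issues_to_address(reports, n_participants=6))
```

Counting participants rather than remarks keeps one very vocal participant from dominating the outcome.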

A test is not complete without…

…an expected result. For the requirements with a usability aspect, the expected outcome was simple: the verification should pass. We inspected the verification test reports to check whether the tests had been performed and had passed.
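The inspection of the verification reports amounts to a traceability check: every requirement marked as usability-related should have been tested, with a passing verdict. A minimal sketch, assuming hypothetical requirement IDs and a simple dictionary of verdicts per requirement; a real project would read these from its requirements and test management tools.

```python
def open_usability_requirements(requirements, verdicts):
    """Usability-marked requirements that do not (yet) have a passing verification.

    requirements: dict of requirement ID -> True if marked as usability-related.
    verdicts: dict of requirement ID -> list of "pass"/"fail" verdicts taken
    from the verification test reports (hypothetical format).
    A requirement is closed only if it was tested and every verdict is "pass".
    """
    open_items = []
    for req_id, is_usability in sorted(requirements.items()):
        if not is_usability:
            continue
        results = verdicts.get(req_id, [])
        if not results or any(v != "pass" for v in results):
            open_items.append(req_id)
    return open_items


# Hypothetical requirement IDs and verdicts, for illustration only.
requirements = {"SYS-001": True, "SYS-002": False, "SYS-003": True, "SYS-004": True}
verdicts = {"SYS-001": ["pass"], "SYS-002": ["fail"], "SYS-003": ["pass", "fail"]}
print(open_usability_requirements(requirements, verdicts))
```

This rule treats any failing verdict as open; a process that allows retests might instead look only at the latest verdict per requirement.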

The learnability requirement was specified as ‘90% of the users must be able to use the system after a short training’. After the 30-minute course, all of our guests were able to work with the system independently, without any problems or help. As the questionnaire results confirmed this, we concluded that this requirement had passed.

Whether the system is “attractive” to work with was derived from the questionnaires. With a score of over 8 out of 10, this requirement was also considered ‘pass’. Nevertheless, the improvements suggested by the guests will be taken into account during further development.
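The two acceptance criteria above can be written down as explicit checks, which makes the pass/fail decision reproducible for an auditor. This is a sketch under assumptions: the function names are hypothetical, learnability is recorded as one observer verdict per participant, and the attractiveness score is aggregated with the mean, which the article does not specify.

```python
from statistics import mean

LEARNABILITY_TARGET = 0.90    # "90% of the users must be able to use the system..."
ATTRACTIVENESS_TARGET = 8.0   # "a score of over 8 out of 10"


def learnability_passed(worked_independently):
    """worked_independently: one bool per participant, as judged by the observer."""
    if not worked_independently:
        raise ValueError("no participants")
    share = sum(worked_independently) / len(worked_independently)
    return share >= LEARNABILITY_TARGET


def attractiveness_passed(scores):
    """scores: questionnaire ratings on a 0..10 scale, aggregated with the mean (an assumption)."""
    return mean(scores) > ATTRACTIVENESS_TARGET


# Hypothetical data, for illustration only.
print(learnability_passed([True] * 9 + [False]))  # 9 of 10 participants
print(attractiveness_passed([8, 9, 9, 8]))
```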

Conclusion

Engineering (development and testing) of “non-functionals” such as usability is subjective and is not picked up automatically. Just do it.

Start early with usability reviews. Prepare them well, and they will provide early and cheap feedback.

Use and learn from methods and heuristics from the software world. They form a good basis for setting up usability testing, not only in a regulated environment.

A team that is eager and passionate does not need much to get good results.

And above all, I learned a lot and became even more interested in usability and human factors.