It is an empirical fact that code duplication (non-DRY code) raises technical debt, which in turn causes maintenance problems for software projects, and especially for their test suites. Non-DRY code can also increase the likelihood of introducing software faults, because a fix or change applied to one area of the SUT (system under test) might not be applied to another area that needs it, simply due to oversight and Murphy’s law.

Automated tests share the same pitfalls and potential problems as project code, since they are, after all, specified by code.

But while refactoring the code in your tests might seem like enough remedy to counteract such potential problems, there is another form of redundancy that is often seen as beneficial for software quality, yet in reality causes the same problems as non-DRY code.

This form of redundancy is called test redundancy. Following intuition, redundant tests are tests that, to some degree, overlap and “test the same thing” with respect to other tests.
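To make the idea concrete, here is a minimal sketch in Python. The function `apply_discount` and all three tests are hypothetical and invented purely for illustration. The first two tests are worded differently but feed the same input through the same code path, so the second adds little that the first does not already provide; the third reaches a branch the others never touch.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


def test_ten_percent_off_hundred():
    assert apply_discount(100.0, 10) == 90.0


def test_discount_reduces_price_by_tenth():
    # Different name and wording, but the same input and the same code path
    # as the test above.
    original = 100.0
    discounted = apply_discount(original, 10)
    assert original - discounted == 10.0


def test_rejects_negative_percent():
    # Exercises the error branch, which neither test above reaches.
    try:
        apply_discount(100.0, -5)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```

All three tests pass, yet only two of them tell you something the others do not.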

Test Redundancy

Indeed, the following question summarises a fundamental problem in software testing: “Does introducing this new test X provide new or more value?”

The value that tests provide can come in many forms. For instance, new tests can add value by detecting previously unknown (but perhaps predicted or recently introduced) defects; or they can add value by showing that a certain code change actually fixes a bug.

It might be tempting to believe that redundant tests are easy to spot, since some tests are exact duplicates of others and clearly provide no value. However, because there are many ways to test a given area of a SUT (with many of those ways being equivalent), spotting redundant tests is not a trivial task.

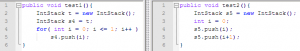

For example, take a look at the following two tests.

You might have easily determined whether the two tests above were redundant, yet it most certainly took some thinking. Now consider the following two tests, which are written in natural language (English) but whose steps can easily be automated to simulate the intended actions:

Are the above two tests redundant? It is certainly hard to tell without knowing their intent or performing further detailed analysis.

In practice, the current belief in software development and testing is that test redundancy is either 1) a harmless artifact of software testing with zero cost, or 2) a kind of “safety net” for the quality of target systems.

On the one hand, the belief is that even if redundant tests provide no value, they certainly do not negatively affect the quality of their SUT. On the other hand, if it is sensed that they somehow add some value, the belief is that having a set of multiple overlapping tests that exercise the same area is better than having just one, and, therefore, that they cannot possibly hurt the quality of their SUT (since having one test that exercises an area of the SUT is a good base case, anyway).

Surprisingly, research has found that this is not the case in reality.

Ortask did a study of more than 50 test suites spanning 10 popular open source projects and made the following discoveries:

- The average test suite has 25% redundant tests;

- Test redundancy is positively linked to bugs in their SUT;

- Test redundancy has a strong negative correlation with code coverage;

- Test redundancy is a useful metric for measuring how smelly automated tests are.

Let’s discuss each of the findings.

Finding 1: The average Test Suite has 25% redundant tests

The study found that around 1/4 of the tests in typical automated test suites are redundant. Indeed, with a 4.2% margin of error, the study found that 95% of test suites have between 20% and 30% redundancy, with outliers having as much as 60%.

This translates to having test suites containing 20% to 30% zero-value work (in the best case), while having invested non-zero work and time into creating such tests.

This discovery also illuminates an interesting connection with the pesticide paradox, particularly why tests and testing techniques appear to lose value over time when applied to the same SUT. The pesticide paradox is an informal argument that draws an analogy between the hardening of biological organisms to pesticides, and software bugs somehow becoming “immune” to the same tests (or testing approach) over time.

Interestingly, perhaps the fact that an average of 25% of tests are redundant actually supports that analogy; and perhaps redundant tests even exacerbate the effect. Regardless, this presents exciting areas that would benefit from research.

It helps to discuss the next two findings together.

Findings 2 and 3: Test redundancy is positively linked to bugs in their SUT, and test redundancy has a strong negative correlation with code coverage

Related to the first finding, the research found a strong correlation between test redundancy and the likelihood of bugs in software. In particular, for Finding 2 the study measured the strength of the correlation (the link) between test redundancy and likelihood of bugs at 0.55. Compare this with the correlation between code coverage and likelihood of bugs, which is -0.53.

Now, with respect to Finding 3, the research found that the correlation between code coverage and test redundancy is a very high -0.78.

What these two findings mean is that, while increasing code coverage tends to decrease the number of bugs that remain in a SUT in the wild (hence the negative correlation of -0.53, as we would expect), increasing test redundancy tends to negate that effect, which ends up increasing the likelihood of bugs being present in the SUT in the wild.

In other words, software projects whose test suites have a higher amount of test redundancy end up somehow reducing the benefits of the code coverage achieved (as evidenced by the strong negative correlation of -0.78) and consequently raise the chance for bugs to remain in the SUT after its release (as indicated by the positive correlation of 0.55).

If this feels counter-intuitive, you are not alone. Yet, this makes sense, because introducing redundant tests into suites certainly does not increase the probability that new or yet untested areas of their SUT will be covered. Bringing back the pesticide paradox for a moment, this is perhaps why it appears as though a SUT becomes immune to its tests: simply because 1/4 of work is done testing areas that have already been tested, on average.

One note of caution: these correlations do not imply causation. That is, the correlations do not indicate that test redundancy causes bugs, just as high code coverage does not cause their absence. Rather, test redundancy is a symptom of poor test quality, improper test management and overall technical debt, all of which lead to bugs in software.
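If you want to look for the same kind of link in your own projects, a correlation coefficient is straightforward to compute. The sketch below assumes Pearson's r, which is an assumption on our part, since this post does not say which coefficient the study used. The two data series are made-up placeholder numbers, not results from the study.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Hypothetical per-project measurements (percentages), for illustration only.
redundancy_pct = [12, 18, 25, 31, 40]
coverage_pct = [85, 80, 70, 72, 55]

r = pearson(redundancy_pct, coverage_pct)
print(f"correlation between redundancy and coverage: {r:.2f}")
```

A value near -1 or +1 indicates a strong linear relationship and a value near 0 a weak one. As noted above, even a strong value says nothing about which factor, if either, causes the other.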

This brings us to the last finding.

Finding 4: Test Redundancy is a useful metric for measuring how smelly automated tests are

Practices that are symptomatic of poor quality, improper management and overall technical debt have a distinct name: they are called smells. Smells are also oftentimes called anti-patterns.

In software development, code duplication is a smell for the reasons already mentioned at the beginning of the post. In the area of management, for example, measuring LOC (lines of code) as programmer productivity is an anti-pattern for the simple reason that there is no relationship between LOC and actual quality work.

Likewise, test redundancy is a smell because it signifies deeper potential problems with practices and/or processes that might be diminishing the quality of your work and the SUT. In the very best case, a test redundancy metric tells you how much of the effort that has gone into automating tests is adding zero value. In the worst case, it can signal deeper, more serious problems that might be reflected in the quality of your SUT when it is released.

As such, measuring the amount of redundancy in test suites becomes a useful practice and a good indicator for gauging the health of your test suites. This allows you to get a different kind of reading from your tests, a reading that could potentially tell you whether your tests or your practices might be hurting your SUT’s quality.
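As a rough starting point, one simple proxy for redundancy is coverage overlap: flag a test when everything it covers is already covered by other tests. The sketch below takes that approach. It is not the method used in the Ortask study, which this post does not describe, and the test names and covered lines are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def redundancy_report(coverage: Dict[str, Set[str]]) -> Tuple[List[str], float]:
    """Return (names of flagged tests, fraction of the suite flagged).

    `coverage` maps each test name to the set of code locations it covers.
    """
    covered: Set[str] = set()
    redundant: List[str] = []
    # Visit tests with the largest footprint first, so broad tests are kept
    # and narrower tests that add nothing new are the ones flagged.
    for name in sorted(coverage, key=lambda n: (-len(coverage[n]), n)):
        if coverage[name] <= covered:
            redundant.append(name)  # adds no location that is not covered yet
        else:
            covered |= coverage[name]
    ratio = len(redundant) / len(coverage) if coverage else 0.0
    return redundant, ratio


# Hypothetical coverage data: test name -> covered "file:line" locations.
example = {
    "test_login_ok": {"auth.py:10", "auth.py:11", "auth.py:15"},
    "test_login_ok_again": {"auth.py:10", "auth.py:11"},
    "test_logout": {"auth.py:30", "auth.py:31"},
    "test_login_bad_password": {"auth.py:10", "auth.py:12"},
}

redundant, ratio = redundancy_report(example)
print(redundant, ratio)  # ['test_login_ok_again'] 0.25
```

Treat the output as a list of candidates to review rather than a verdict. Two tests can cover the same lines while asserting different things, so a coverage-based proxy can both over-report and under-report real redundancy.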

To read the complete results of the research, head to the Ortask website.
