Increased transparency and the need to drive quantitative business outcomes with a “right the first time” expectation are key drivers for the transition to agile methodologies. This transition demands a paradigm shift in testing and requires nimble processes coupled with the right tools, people and technology. Added to this is the issue of distributed teams, a given in testing services. A best practice to aid this transition is to build innovations and deliver testing services with pre-built assets and out-of-the-box solutions. In this blog post, you will learn first-hand the critical factors in transitioning to agile methodologies. We will also highlight real-world experience in agile testing, along with assets on various platforms that aid global delivery in a distributed environment. You will:

- Deep dive into an agile test assessment framework to aid transition to agile testing

- Walk through various models and best practices for testing in a distributed environment

- Explore an early defect detection framework to create testable requirements from user stories

- Learn about estimation practices to deliver agile testing services

“How do I estimate?”, “How do I plan?”, “How should my team be structured?” and “Can I use an outsourced model?” are all key questions in a test manager’s mind. Below are some of my learnings that can help a test manager execute agile test projects in a distributed model.

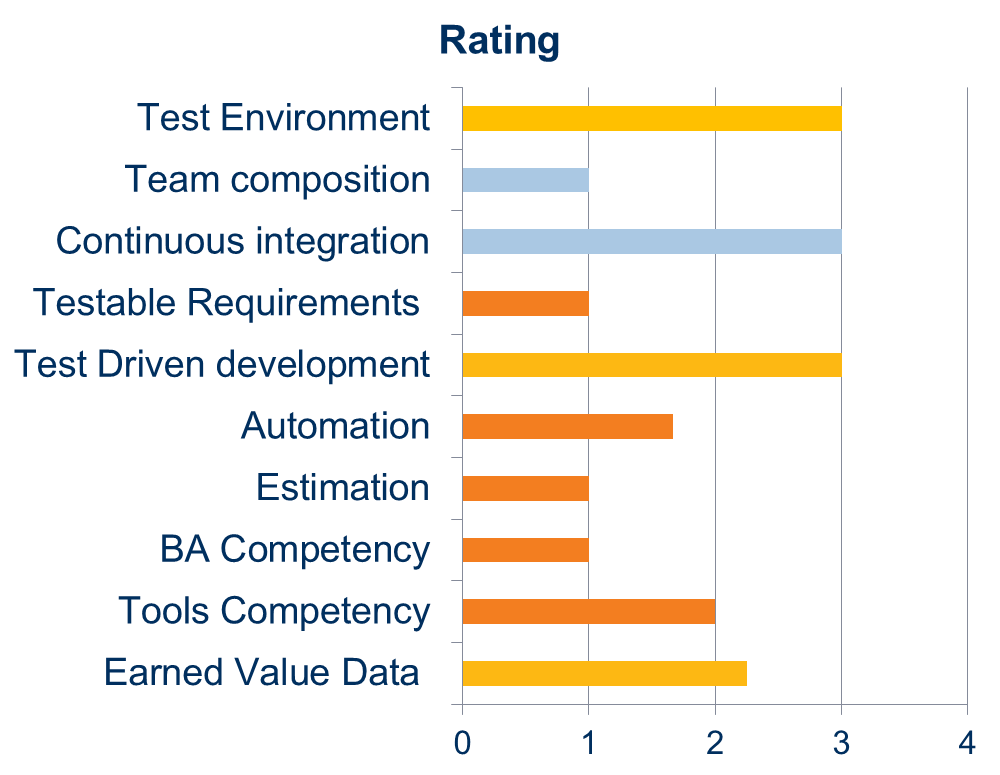

- Learning 1: Quantifying ‘discipline’ in the testing process is key. One mechanism to instill this discipline is to build a custom test assessment framework (such as the one indicated below) comprising test parameters, guidelines and evaluation criteria. Keep the framework balanced and customizable, with parameters covering the planning, execution, estimation and governance of a testing project in a global delivery model. Select parameters for their relevance to the type, nature and delivery goals of the project and/or business unit. Use the findings of the assessment to build a roadmap. Each practice is rated on the following scale:

– 0: the practice does not exist

– 1: the practice exists but is not defined

– 2: the practice exists and is defined

– 3: the practice is optimized
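
As a rough illustration of how such an assessment could feed a roadmap, the sketch below averages the 0 to 3 ratings per area and lists the lowest-rated practices first. The area names, parameter names, ratings and the `threshold` cut-off are all hypothetical and are not taken from any published framework.

```python
from collections import defaultdict
from statistics import mean

# Maturity scale taken from the list above.
SCALE = {
    0: "practice does not exist",
    1: "practice exists but is not defined",
    2: "practice exists and is defined",
    3: "practice is optimized",
}


def summarize_assessment(ratings):
    """Average the 0-3 ratings per area.

    `ratings` is a list of (area, parameter, score) tuples.
    """
    by_area = defaultdict(list)
    for area, parameter, score in ratings:
        if score not in SCALE:
            raise ValueError(f"{parameter}: score must be 0-3, got {score}")
        by_area[area].append(score)
    return {area: round(mean(scores), 2) for area, scores in by_area.items()}


def roadmap_candidates(ratings, threshold=2):
    """Return parameters rated below `threshold`, lowest score first."""
    gaps = [(score, area, parameter)
            for area, parameter, score in ratings if score < threshold]
    return [(area, parameter, score) for score, area, parameter in sorted(gaps)]


# Hypothetical ratings, for illustration only.
example_ratings = [
    ("planning", "sprint test plan", 2),
    ("planning", "risk-based prioritization", 1),
    ("execution", "regression suite per sprint", 0),
    ("estimation", "documented estimation model", 1),
    ("governance", "cross-site status reporting", 3),
]

area_scores = summarize_assessment(example_ratings)
priorities = roadmap_candidates(example_ratings)
```

Sorting the gaps by score is only one possible prioritization; a real roadmap would likely also weigh each parameter by its relevance to the project or business unit.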

- Learning 2: Understand the difference between Scrum commitment and test estimates. In my experience, this is the biggest challenge for the testing team. To address it, create a custom test estimation model by technology, domain and nature of in-scope activities. Use a combination of top-down (activity breakdown) and bottom-up (collation of in-scope work) approaches. Important considerations during effort estimation include, but are not limited to: estimation for regression testing between sprints; factoring in automation and non-functional test types; and planning for risk-based test approaches, which are critical to meeting target dates.
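
One way to combine the two approaches is to compute both figures and compare them. The sketch below is a minimal illustration under stated assumptions: the activity shares, story identifiers, hour figures and the 10% tolerance are invented for the example, and the function names are hypothetical.

```python
def top_down_estimate(total_test_hours, activity_shares):
    """Break a sprint-level test budget down by activity share."""
    if abs(sum(activity_shares.values()) - 1.0) > 1e-9:
        raise ValueError("activity shares must sum to 1")
    return {activity: total_test_hours * share
            for activity, share in activity_shares.items()}


def bottom_up_estimate(story_hours, regression_hours,
                       automation_hours, nonfunctional_hours):
    """Collate in-scope work: per-story hours plus cross-cutting items."""
    return (sum(story_hours.values()) + regression_hours
            + automation_hours + nonfunctional_hours)


def reconcile(top_down_total, bottom_up_total, tolerance=0.10):
    """Return the relative gap and whether it falls within the tolerance."""
    gap = (bottom_up_total - top_down_total) / top_down_total
    return gap, abs(gap) <= tolerance


# Hypothetical sprint figures, for illustration only.
shares = {"test design": 0.3, "execution": 0.4,
          "regression": 0.2, "automation": 0.1}
breakdown = top_down_estimate(200, shares)

stories = {"US-1": 40, "US-2": 60, "US-3": 50}
collated = bottom_up_estimate(stories, regression_hours=40,
                              automation_hours=30, nonfunctional_hours=20)

gap, within_tolerance = reconcile(200, collated)
```

With these numbers the bottom-up total exceeds the top-down budget by a wide margin, which is the kind of signal that would prompt a risk-based cut of scope before the team commits to the sprint.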

- Learning 3: Build a multi-skilled team. Agile tester job descriptions often list a plethora of skills that may not be available in one individual. Instead, build a team that combines skill sets such as technical tester, business-oriented tester, performance tester and automation engineer. Depending on the scheduling of agile projects and related activities, this team can then be leveraged across multiple projects.

- Learning 4: Learn the art of activity slicing. This concept works very well for a Testing Center of Excellence catering to multiple projects. In this technique, test effort is estimated at an aggregate level by slicing activities across multiple projects, while the team location is defined by activity. An example is shown below:

| Activities | EUROPE | INDIA |
| --- | --- | --- |
| Sprint planning | X | |
| Feature Stories | X | |
| Automation | X | |
| Architecture Meetings | X | |
| Testing Workshops | X | |
| Test Execution | X | |
| Test Case Creation | X | |
| Team Composition | 50% | 50% |
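
The aggregation behind activity slicing can be sketched as a simple roll-up: each activity is mapped to a location, and hours are summed per location across all projects. The project names, hours and the "site A" / "site B" assignments below are placeholders chosen for illustration; they do not reproduce the allocation in the table above.

```python
from collections import defaultdict


def slice_effort(project_effort, activity_location):
    """Aggregate estimated hours per location across projects.

    project_effort: {project: {activity: hours}}
    activity_location: {activity: location}
    """
    totals = defaultdict(float)
    for project, activities in project_effort.items():
        for activity, hours in activities.items():
            if activity not in activity_location:
                raise KeyError(f"no location defined for activity {activity!r}")
            totals[activity_location[activity]] += hours
    return dict(totals)


# Hypothetical mapping and estimates, for illustration only.
activity_location = {
    "Sprint planning": "site A",
    "Test Case Creation": "site B",
    "Test Execution": "site B",
}
project_effort = {
    "Project 1": {"Sprint planning": 10, "Test Case Creation": 40, "Test Execution": 60},
    "Project 2": {"Sprint planning": 8, "Test Case Creation": 30, "Test Execution": 50},
}

hours_by_location = slice_effort(project_effort, activity_location)
```

Raising an error for an unmapped activity is a deliberate choice here: an activity without an owning location is usually a planning gap worth surfacing early.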

- Learning 5: Proactive versus reactive automation. Build a culture where automation is planned and implemented during the development of each story, rather than catching up after a few sprints or at the point where the regression testing effort has become colossal. Reusability of test assets is key. Build a library of pre-built test components. Good examples are libraries of functional scenarios for enterprise applications such as SAP, PeopleSoft, Siebel, Oracle and Salesforce, and for mobile applications. A good test automation framework that supports component-level (rather than scenario-based) automation is very important when planning a proactive automation approach.
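
To make the contrast between component-level and scenario-based automation concrete, here is a minimal sketch in which small reusable components are registered once and then composed into scenarios by name. The component names and the dictionary used as application state are stand-ins invented for the example; a real component would drive the application under test.

```python
from typing import Callable, Dict, List

# Registry of reusable test components, keyed by name.
COMPONENTS: Dict[str, Callable[[dict], dict]] = {}


def component(name):
    """Register a function as a reusable test component."""
    def register(func):
        COMPONENTS[name] = func
        return func
    return register


@component("login")
def login(state):
    return {**state, "logged_in": True}


@component("create_order")
def create_order(state):
    # Each component checks its own precondition.
    if not state.get("logged_in"):
        raise AssertionError("create_order requires a logged-in session")
    return {**state, "orders": state.get("orders", 0) + 1}


@component("logout")
def logout(state):
    return {**state, "logged_in": False}


def run_scenario(steps: List[str], state=None):
    """Run the named components in order, passing state between them."""
    state = dict(state or {})
    for step in steps:
        state = COMPONENTS[step](state)
    return state


# A scenario is just an ordered list of component names.
result = run_scenario(["login", "create_order", "create_order", "logout"])
```

Because a scenario is only a list of names, a new story can often be covered within the sprint by recombining existing components and writing just the one or two that are missing.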

- Learning 6: Finally, the single biggest problem in communication is the illusion that it has taken place. A variety of technology aids and training programs promote communication, but nothing replaces human connection. Promote a culture of collaboration. Create opportunities for the teams to work together in the same location for brief periods of time. When a project starts, it is wise to have the whole team travel to the customer site and work together with the onshore team before transitioning to a distributed model.

About The Author

Deepika Mamnani heads the global pre-sales and solutions arm of the Quality Assurance and Testing Services group at Hexaware Technologies. In this role she is responsible for devising testing solutions and creating proposals and roadmaps for testing organisations across industry verticals such as Banking and Financial Services, Capital Markets, Travel, Transportation and Retail. Deepika’s core competency is conducting assessments of testing processes across software development life cycles such as Scrum, XP and the V-model. Deepika is also an expert at defining transition models and governance structures for testing organisations. In this capacity, she has played advisory roles for several organisations during the implementation of their Testing Centers of Excellence (CoEs), both in-house and outsourced. Deepika is a CSTE, is CSQA certified and is a Certified Scrum Master.
