Looking at emerging trends: in an era of accelerating digital disruption, 57% of enterprise organizations are using data and analytics to drive strategy and change.

By 2025, IDC predicts that the total amount of digital data created worldwide will rise to 163 zettabytes, driven by the growing number of devices and sensors (IDC, Data Age 2025).

In today's customer-centric business landscape, most enterprises are focusing on emerging technologies such as artificial intelligence, embedded connectivity, the Internet of Things and hyperconnected cloud solutions. However, the foundation of every one of these technology trends is data. To achieve quality in applications built on these trends, we need to rethink the way data is tested and start testing it earlier.

Nowadays there is a saying, “Data is the new oil” or “Data is power.” It is crucial for data to be of high quality and accuracy, because data not only informs business decisions and drives strategy but is also the core input to applications built on emerging technologies. This is where verification and validation of data, the approach to testing data, comes in. In software quality, verifying data integrity is important, and without changing the way we test data it is impossible to meet today's data validation needs across operations, integration, backends, collected data and other forms of data.

Challenges

Earlier applications had small, structured data sets: a single database could suffice, restricted to the application's needs, and some of the data was created by the tester to test and verify the application. Now data comes in large-scale combinations of structured and unstructured formats, and predicting the next data set is very difficult. The shift from traditional testing to AI testing brings these challenges:

- AI applications are non-deterministic and probabilistic

- Dependency on huge amounts of data

- Difficult to predict all scenarios

- Continuous learning from past behavior

- No clearly defined expected input/output

- Continuous monitoring and updates are required
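Because AI output is probabilistic, an exact "expected output" assertion rarely works. A common alternative is to run the model many times and check an aggregate statistic against a tolerance. The sketch below is illustrative only: `noisy_model`, the tolerance and the number of runs are hypothetical stand-ins, not part of any specific framework.

```python
import random
import statistics


def noisy_model(x, rng):
    # Hypothetical stand-in for a probabilistic model: true answer 2*x plus Gaussian noise.
    return 2 * x + rng.gauss(0, 0.5)


def check_within_tolerance(model, inputs, expected_fn, runs=200, tolerance=0.2, seed=7):
    """Return (input, observed mean) pairs whose mean output misses the expectation."""
    rng = random.Random(seed)  # fixed seed keeps the test itself reproducible
    failures = []
    for x in inputs:
        outputs = [model(x, rng) for _ in range(runs)]
        mean = statistics.fmean(outputs)
        if abs(mean - expected_fn(x)) > tolerance:
            failures.append((x, mean))
    return failures


print(check_within_tolerance(noisy_model, [0, 1, 5], lambda x: 2 * x))
```

With 200 runs the standard error of the mean is about 0.035, so a tolerance of 0.2 separates noise from a genuinely wrong expectation.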

Approach

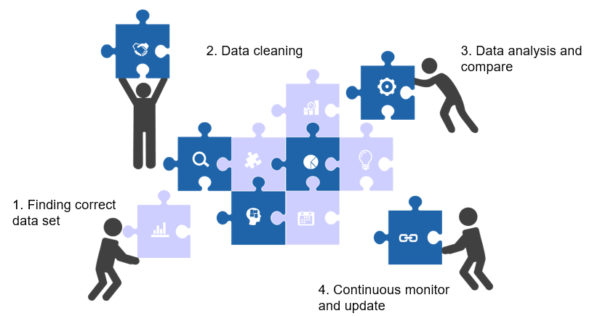

With test data automation, a data-driven approach resolves data quality issues by constantly validating and verifying the input data in a data stream. Most data testing challenges can be resolved by breaking data testing activities into four major components:

a. Finding the correct set of data

This step is the most difficult; once the tester identifies the correct data set, testing is half done. To find the correct data set accurately, the in-built model internally uses methods such as tokenization (with the NLTK and TextBlob Python libraries), normalization, stemming and lemmatization, and rule-based classification.
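The following sketch shows how tokenization, normalization, stemming and rule-based classification fit together. To stay dependency-free it does not use NLTK or TextBlob: the regex tokenizer stands in for `nltk.word_tokenize`, the naive suffix stripper stands in for a real stemmer such as NLTK's `PorterStemmer`, and lemmatization (which needs a dictionary such as WordNet) is omitted. The `RULES` categories and keywords are hypothetical examples of business rules.

```python
import re

# Hypothetical business rules: category -> stemmed keywords that mark a record as relevant.
RULES = {
    "payment": {"charg", "refund", "invoic", "payment"},
    "shipping": {"deliver", "track", "parcel", "shipp"},
}

# Naive suffix list; a real pipeline would use e.g. nltk.stem.PorterStemmer instead.
SUFFIXES = ("ing", "ed", "es", "s")


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def tokenize(text: str) -> list[str]:
    """Split into alphanumeric tokens (stand-in for nltk.word_tokenize)."""
    return re.findall(r"[a-z0-9]+", text)


def stem(word: str) -> str:
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def classify(text: str) -> str:
    """Rule-based classification: pick the category with the most keyword hits."""
    stems = [stem(tok) for tok in tokenize(normalize(text))]
    scores = {cat: sum(s in keywords for s in stems) for cat, keywords in RULES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unclassified"


print(classify("Customer was CHARGED twice and asked for refunds"))  # payment
```

Records classified as "unclassified" are the ones a tester would review or exclude when assembling the correct data set.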

b. Cleaning data for the required business use case

Data must be cleaned to achieve higher accuracy, without manipulating what it means. Raw data is first collected from the correct set of sources; the pandas Python library is then used for data binning (data bucketing) and for classifying records according to the business rules added to the model.
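A minimal pandas sketch of this cleaning step, assuming a small hypothetical order table: it removes duplicate rows and rows missing the value under test, normalizes country codes, bins amounts with `pd.cut`, and applies one hypothetical business rule. The column names, band edges and review rule are illustrative assumptions, not the framework's actual configuration.

```python
import pandas as pd

# Hypothetical raw records collected from the source systems.
raw = pd.DataFrame({
    "order_id": [1, 2, 2, 3, 4, 5],
    "amount": [120.0, 35.5, 35.5, None, 980.0, 15.0],
    "country": ["US", "de", "de", "US", " FR", "US"],
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.drop_duplicates().copy()                          # remove verbatim duplicate rows
    out = out.dropna(subset=["amount"])                        # drop rows missing the tested value
    out["country"] = out["country"].str.strip().str.upper()   # normalize codes, meaning unchanged
    # Binning (bucketing): assign each amount to a hypothetical business band.
    out["amount_band"] = pd.cut(
        out["amount"],
        bins=[0, 50, 500, float("inf")],
        labels=["low", "medium", "high"],
    )
    # Hypothetical business rule: high-value orders are flagged for review.
    out["needs_review"] = out["amount_band"] == "high"
    return out.reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
```

`pd.cut` uses right-closed intervals by default, so an amount of exactly 50 falls into the "low" band.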

c. Comparing and analyzing the data

Collected and consumed data is validated against the given use cases, using various forms of automatic test data generation.
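One way to compare heterogeneous data is to load each format into a common keyed shape and then diff the records. The sketch below assumes a CSV source and a JSON target with a shared `id` key. All sample data and function names are hypothetical, and values are compared as strings, so `20` and `20.0` would count as different.

```python
import csv
import io
import json

# Hypothetical source (CSV export) and target (JSON API response) for the same records.
SOURCE_CSV = "id,name,amount\n1,Alice,10.5\n2,Bob,20\n3,Carol,7\n"
TARGET_JSON = (
    '[{"id": 1, "name": "Alice", "amount": 10.5},'
    ' {"id": 2, "name": "Bob", "amount": 25},'
    ' {"id": 4, "name": "Dan", "amount": 3}]'
)


def load_csv(text, key):
    """Index CSV rows by key; csv.DictReader already yields string values."""
    return {row[key]: row for row in csv.DictReader(io.StringIO(text))}


def load_json(text, key):
    """Index JSON records by key, converting values to strings to match the CSV side."""
    return {str(rec[key]): {k: str(v) for k, v in rec.items()} for rec in json.loads(text)}


def compare(source, target):
    """Report keys missing from target, extra keys in target, and per-field mismatches."""
    missing = sorted(source.keys() - target.keys())
    extra = sorted(target.keys() - source.keys())
    mismatched = {}
    for k in sorted(source.keys() & target.keys()):
        diffs = {
            field: (source[k].get(field), target[k].get(field))
            for field in source[k]
            if source[k].get(field) != target[k].get(field)
        }
        if diffs:
            mismatched[k] = diffs
    return {"missing": missing, "extra": extra, "mismatched": mismatched}


report = compare(load_csv(SOURCE_CSV, "id"), load_json(TARGET_JSON, "id"))
print(report)
```

Only fields present on the source side are checked. A stricter comparison would also flag fields that exist only in the target.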

d. Continuous monitoring and updates

The data tests are integrated into the existing automation suite's Jenkins pipeline for continuous testing, and the alerts and notifications raised during monitoring drive continuous improvement.
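A Jenkins stage fails when the command it runs exits with a non-zero status, so a data check can plug into a pipeline as a plain script. The sketch below assumes each batch arrives as a JSON list of records. It raises alerts when a required field's null rate, or a drop in row count, crosses a threshold. The thresholds, field names and command-line arguments are hypothetical.

```python
import json
import sys

# Hypothetical thresholds a monitoring job might enforce on each new batch.
MAX_NULL_RATE = 0.05
MAX_ROW_COUNT_DROP = 0.20


def null_rate(rows, field):
    """Fraction of rows where the field is missing, None or empty."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(field) in (None, "")) / len(rows)


def check_batch(rows, previous_count, required_fields):
    """Return human-readable alerts; an empty list means the batch passed."""
    alerts = []
    for field in required_fields:
        rate = null_rate(rows, field)
        if rate > MAX_NULL_RATE:
            alerts.append(f"{field}: null rate {rate:.0%} exceeds {MAX_NULL_RATE:.0%}")
    if previous_count and len(rows) < previous_count * (1 - MAX_ROW_COUNT_DROP):
        alerts.append(f"row count fell from {previous_count} to {len(rows)}")
    return alerts


def main(path, previous_count):
    with open(path) as fh:
        rows = json.load(fh)
    alerts = check_batch(rows, previous_count, ["id", "amount"])
    for alert in alerts:
        print("ALERT:", alert)
    return 1 if alerts else 0  # non-zero exit status marks the pipeline stage as failed


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], int(sys.argv[2])))
```

In a Jenkinsfile, a stage could run this with a step such as `sh 'python check_batch.py batch.json 1000'` (file names hypothetical). The printed alerts then appear in the build log, where notification plugins can pick them up.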

Benefits

Our solution supports data quality analysis, heterogeneous data comparison, data anomaly identification, building models with validated data, and system integration. Together these make up the robust automation framework that is the need of the hour. The data and analytics automation framework also delivers business benefits by improving the productivity and efficiency of testing and development:

- Reduced effort in finding the correct data sets and removing false positives

- Faster time-to-market for applications built on emerging technologies whose core value is data

- 30-60% reduction in data testing effort, and lower cost

- Management of high data volumes, scaling as business requirements demand

- Coverage of heterogeneous data formats and 100% test coverage for data

- Low-code solution for data testing

- Built-in algorithm testing and user-friendly reports


Conclusion

The vision of game-changing, solution-oriented data analysis and modeling is inhibited by a lack of clean, correct and structured data in the system. This undermines business forecasting, the identification of new opportunities, and the technical models and algorithms needed to implement emerging technologies. The role of quality assurance is therefore important here: data and big data analysis call for manual and automated testing, real-time optimization, and data quality tests applied to every input to analytical sources.