Every new project brings challenges – in our case turning an online banking application into a multi-tenant, group-wide platform. While the different back-end development teams worked hard to get the new tenants up and running, the web front-end developers complained regularly that one or more REST endpoints were not working, which made their lives difficult. Over one morning coffee we discussed how to improve the situation and the following question popped up: “What about having a REST client which walks the REST endpoints in our test environments every morning to see if they work?!” The back-end team would get immediate feedback and the front-end developers might have time to reschedule their tasks. How hard can that be?!

Gatling for Testing

There are many ways to invoke REST endpoints but using a performance test tool instead of custom code would bring additional benefits – once you get your test running you get performance testing and reporting for free. Initially we leaned towards Apache JMeter but a while ago we came across the following email [1] stating:

While we have extensive experience with Apache JMeter, Gatling did sound interesting

- Maintaining a complex JMeter setup is not easy

- Scripts written in a Scala DSL would work nicely with IntelliJ providing auto-completion and re-factoring support

We decided to try out Gatling for testing as part of a “side project” – that “not so really important” stuff you do on Fridays and bridging days to stretch your skills and bring back the fun. After a couple of Fridays, the first working iteration of the test suite was finished. Gatling provides a Scala DSL which makes writing the tests straight-forward assuming that you have a Scala-aware IDE (such as IntelliJ) to help you with the syntax and brackets. As Scala newbies we took the opportunity to implement some additional infrastructure code to stretch our skills and improve the maintainability of the test suite.

The code snippet below reads through a user CSV file and executes five REST requests using a single virtual user – if any error happens the overall test fails.

    class Test extends ConfigurableSimulation {

      // One pass through the request chain per record in the user CSV file
      val users = scenario("Users")
        .repeat(userCsvFeeder.records.length) {
          feed(userCsvFeeder)
            .exec(
              Token.get,
              GeorgeConfiguration.get,
              GeorgePreferences.get,
              GeorgeAccounts.get,
              GeorgeTransactions.get
            )
        }

      // A single virtual user; any failed request fails the overall test
      setUp(users.inject(atOnceUsers(1)))
        .protocols(httpConfServer)
        .assertions(global.failedRequests.count.is(0))
    }

In our case the REST endpoint invocation code uses Scala singletons as described in [3]. An HTTP GET request fetches all accounts and a JSON path expression stores the account identifiers in the user’s session:

    object GeorgeAccounts {

      val get = exec(http("my/accounts")
        .get(ConfigurationTool.getGeorgeUrl("/my/accounts"))
        .header("Authorization", "bearer ${token}")
        // Note: the next two arguments are evaluated once, when this object is initialised
        .header("X-REQUEST-ID", UUID.randomUUID.toString())
        .queryParam("_", System.currentTimeMillis())
        .check(
          // Store the identifiers of all accounts in the user's session
          jsonPath("$.collection[*].id")
            .ofType[String]
            .findAll
            .optional
            .saveAs("accountIds")))
    }

The Gatling test was wired into Jenkins to run early every morning against the tenant’s FAT environment. From then on, the first task each morning was checking the results and discussing any problems with the back-end developers.

Ant Or Not To Ant

While the original question is nearly 400 years old and much more fundamental we tend to wrap invocations of command line tools into Ant scripts. It is always a good idea to

- Encapsulate an impossible to remember command line

- Expose a “standard command line interface” familiar to Maven users

- Add convenience functions, e.g. archiving the last test report

Using Gatling out of the box the caller would need to type

gatling.sh --simulation at.beeone.george.test.gatling.simulation.george.at.functional.Test

while Ant lets us settle for a simple

ant test

Welcome To The Matrix

After spreading the word a few people became interested in running Gatling tests on their own. One colleague asked

Could we run the same tests for our own REST endpoints in the development and test environments to see if they work?!

Well, we always assumed that Austria’s REST endpoints are in a good shape but this would have been a petty statement so the answer was: “Of course it is possible but we need to add some configuration magic first”.

The added configuration is now referred to as The Configuration Matrix, which defines the four Configuration Dimensions

| Dimension | Description |
|---|---|
| tenant | The tenants (aka countries) provide additional features you need to test |
| application | The application to be simulated, e.g. the web client might provide different functionality than an iOS app |
| site | Various staging environments are used, requiring different REST endpoint URLs |
| scope | Differentiates between a smoke test and a much larger functional test |

In order to run tests we need some Configuration Items

| Configuration Item | Description |
|---|---|
| environment.properties | Base URLs for REST endpoints and other configuration data |
| user.csv | User credentials consisting of user name and password |

How can we model these four Configuration Dimensions using Configuration Items to get something more concrete? The Configuration Dimensions are modeled as file system folders while the Configuration Items are stored as files, which results in the following picture

    `-- at
        `-- fat
            |-- environment.properties
            |-- george
            |   |-- functional
            |   |   `-- environment.properties
            |   `-- smoketest
            |       |-- environment.properties
            |       `-- user.csv
            `-- user.csv

In addition, we imprint the following semantics

- environment.properties are merged from the leaf directory upwards where the first property definition wins (aka write-once)

- user.csv files are searched from the leaf directory upwards where the first hit wins
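The two lookup rules above can be sketched in a few lines of plain Scala. This is only an illustration under assumptions: the object and method names (`ConfigurationLookup`, `searchPath`, `mergeFirstWins`, `firstHit`) are hypothetical and not taken from our test suite, and the property files are represented as already-loaded maps to keep the sketch free of file I/O.

```scala
import java.nio.file.{Path, Paths}

// Hypothetical helper, not the actual implementation used in the test suite.
object ConfigurationLookup {

  /** Directories to inspect, ordered from the leaf directory up to the tenant directory. */
  def searchPath(tenant: String, site: String, application: String, scope: String): List[Path] = {
    val leaf = Paths.get(tenant, site, application, scope)
    // Walk upwards: at/fat/george/functional, at/fat/george, at/fat, at
    Iterator.iterate(leaf)(_.getParent).takeWhile(_ != null).toList
  }

  /** Merge property maps given in leaf-first order; the first definition of a key wins. */
  def mergeFirstWins(leafFirst: List[Map[String, String]]): Map[String, String] =
    leafFirst.foldLeft(Map.empty[String, String]) { (merged, next) =>
      // On a key clash the right-hand side wins, so earlier (leaf-closer) values are kept
      next ++ merged
    }

  /** Return the first file (searching leaf-first) for which `exists` is true, if any. */
  def firstHit(dirs: List[Path], exists: Path => Boolean, fileName: String): Option[Path] =
    dirs.map(_.resolve(fileName)).find(exists)
}
```

Passing the existence check as a function keeps the sketch testable without touching the file system; a real implementation would presumably hand in something like `java.nio.file.Files.exists`.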

By now we defined four Configuration Dimensions, Configuration Items and a strategy to load them. The one thing missing in the setup is the qualified name of the Scala script to be executed. The following convention is used to derive the Scala script name from the given configuration information

at.beeone.george.test.gatling.simulation.${application}.${tenant}.${scope}.Test
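Expressed as code, the naming convention is a simple string template. The sketch below is hypothetical (in our setup the name is derived by the Ant script, and `SimulationName` is a name made up for illustration); it mainly shows that the site dimension does not take part in the class name.

```scala
// Hypothetical helper illustrating the naming convention; not part of the test suite.
object SimulationName {

  private val BasePackage = "at.beeone.george.test.gatling.simulation"

  /** Fully qualified simulation class for the given application, tenant and scope. */
  def of(application: String, tenant: String, scope: String): String =
    s"$BasePackage.$application.$tenant.$scope.Test"
}
```

For example, `SimulationName.of("george", "at", "functional")` yields the class name used in the `gatling.sh` invocation shown earlier.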

Configuration Example

The previous chapter sounded a bit abstract – let’s look at an example to clarify things. Assume that we want to execute a functional test representing George Online Banking hitting Austria’s FAT system. In order to execute the test the following command line would be used

ant -Dtenant=at -Dapplication=george -Dsite=fat -Dscope=functional clean run

The Ant script determines the fully qualified name of the Scala class as at.beeone.george.test.gatling.simulation.george.at.functional.Test. The Gatling script picks up the following configuration items

- The environment.properties are merged upwards from

    - at/fat/george/functional/environment.properties

    - at/fat/george/environment.properties

- The credentials are taken from at/fat/user.csv

Going Functional

A few weeks later we did a large refactoring introducing Data Transfer Objects (DTOs) for all REST endpoints instead of exposing the internal data model. While this was largely a mechanical task aided by the IDE, we could have accidentally changed some part of the JSON payload. That becomes an issue when dealing with mobile applications since they are much harder to update. Consequently the next question came up: “What about having a REST client which walks the various REST endpoints every morning to see if the JSON responses have changed?!”

Well, we already have a REST client which walks the various REST endpoints so we just have to capture the JSON responses and compare them with a set of recorded templates. How hard can it be?!

Looking closely at the task at hand shows that the following functionality is required

- Remove some parts from the JSON response, e.g. lastLoginDate

- Pretty-print the JSON response to cater for human-readable comparison

- Save the final JSON response using a meaningful name

- Add a switch to distinguish between functional and performance tests

- Compare between the current and expected responses

We decided to move the JSON handling into a separate project [4] – implementing it in Scala turned out to be harder than expected due to our (well, actually my) limited Scala skills. An example of the updated Scala implementation is shown below:

    object GeorgeAccounts {

      val get = exec(http("my/accounts")
        .get(ConfigurationTool.getGeorgeUrl("/my/accounts"))
        .header("Authorization", "bearer ${token}")
        .header("X-REQUEST-ID", UUID.randomUUID.toString())
        .queryParam("_", System.currentTimeMillis())
        .check(
          // Capture the complete JSON response in the session
          jsonPath("$").ofType[Any].find.saveAs("lastResponse"),
          jsonPath("$.collection[*].id")
            .ofType[String]
            .findAll
            .optional
            .saveAs("accountIds")))
        .exec(session => {
          val userId: String = session.get("user").as[String]
          // Pretty-print the captured response and write it to the file system
          JsonResponseTool.saveToFile(
            session,
            "lastResponse",
            "george/my/accounts",
            userId)
          session
        })
    }

Let’s have a closer look at what’s happening here

- The Gatling Check API applies a JSON Path expression and saves the result as lastResponse in the user session

- The current user id is extracted into a userId value

- The JsonResponseTool takes the JSON content, does the pretty-printing and stores the JSON content in the file system

- Internally the JsonResponseTool builds a meaningful file name, e.g. “george-my-transactions-XXXXX.json”
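To make the file naming a bit more tangible, here is a minimal sketch of how such a name could be derived from the endpoint label and the user id. It is an assumption for illustration only: `ResponseFileName` and `build` are hypothetical names, and the real JsonResponseTool may build its names differently.

```scala
// Hypothetical sketch of the file naming; the real JsonResponseTool may differ.
object ResponseFileName {

  /** Turns an endpoint label such as "george/my/transactions" plus a user id into a file name. */
  def build(endpoint: String, userId: String): String = {
    // Replace path separators with dashes and drop empty segments (leading or doubled slashes)
    val prefix = endpoint.split('/').filter(_.nonEmpty).mkString("-")
    s"$prefix-$userId.json"
  }
}
```

With this sketch, `ResponseFileName.build("george/my/transactions", "XXXXX")` produces the file name quoted in the list above.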

This only leaves recording the expected responses and comparing them with the current JSON response which is actually implemented in the Ant script

ant -Dtenant=at -Dsite=fat -Dapplication=george clean record

ant -Dtenant=at -Dsite=fat -Dapplication=george clean verify

We intended to run the functional test every morning but it turned out that this approach is too fragile to be of practical use

- The test users are not used exclusively by the Gatling tests but also for regular testing, e.g. a new payment order done by QA changes the JSON response

- Most test environments are set up to import new transactions regularly, thereby changing the JSON response for good reason

- Some of our REST endpoints do not implement strict ordering of list results

Let’s assume that this goes under “Lessons learned”. Having said that, this feature is still useful for verifying a deployment

- Capture a new set of expected responses before the deployment

- Notify everyone to leave the test system alone and deploy the new artifacts

- Compare the current with the expected responses and manually review the changes

- Assuming that everyone is happy send out the good news that the latest and greatest version is out now
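The compare step boils down to matching two sets of files by name and flagging every difference for manual review. In our setup this lives in the Ant script, so the following Scala is not our implementation but a hypothetical sketch of the idea; all names in it are invented, and the recorded and current responses are modeled as in-memory maps from file name to pretty-printed JSON.

```scala
// Hypothetical sketch of the compare step; the actual comparison is done by the Ant script.
object ResponseComparison {

  sealed trait Difference
  final case class Changed(fileName: String) extends Difference     // content differs
  final case class Missing(fileName: String) extends Difference     // recorded, but not produced now
  final case class Unexpected(fileName: String) extends Difference  // produced now, but never recorded

  /** Compare recorded (expected) and current responses, both keyed by file name. */
  def compare(expected: Map[String, String], current: Map[String, String]): List[Difference] = {
    val names = (expected.keySet ++ current.keySet).toList.sorted
    names.flatMap { name =>
      val difference: Option[Difference] = (expected.get(name), current.get(name)) match {
        case (Some(e), Some(c)) if e == c => None
        case (Some(_), Some(_))           => Some(Changed(name))
        case (Some(_), None)              => Some(Missing(name))
        case (None, Some(_))              => Some(Unexpected(name))
        case (None, None)                 => None
      }
      difference
    }
  }
}
```

An empty result means the deployment did not change any recorded response; anything else is a list of files to review by hand.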

Performance Testing

A couple of weeks later a manager asked about “that fancy new test tool” and whether they could use it to run some initial performance tests hitting a newly set up test environment. Well, we were already forcing Gatling to run functional tests, so running performance tests should be a breeze. The only challenge would be migrating the whole setup to virtual machines where the manager’s development team could run the performance tests on their own.

The answer to this challenge lies in combining existing tools: “The whole is greater than the sum of its parts.”

- Gatling tests are executed on the command line – no GUI required

- The Gatling command line invocation was already encapsulated with an easy to use Ant script

- The Jenkins CI server [5] provides out-of-the-box Ant support

- Each performance test scenario was implemented as a Jenkins job triggering the Ant script

- The Jenkins CI server instance was locked down [6] to avoid unauthenticated access

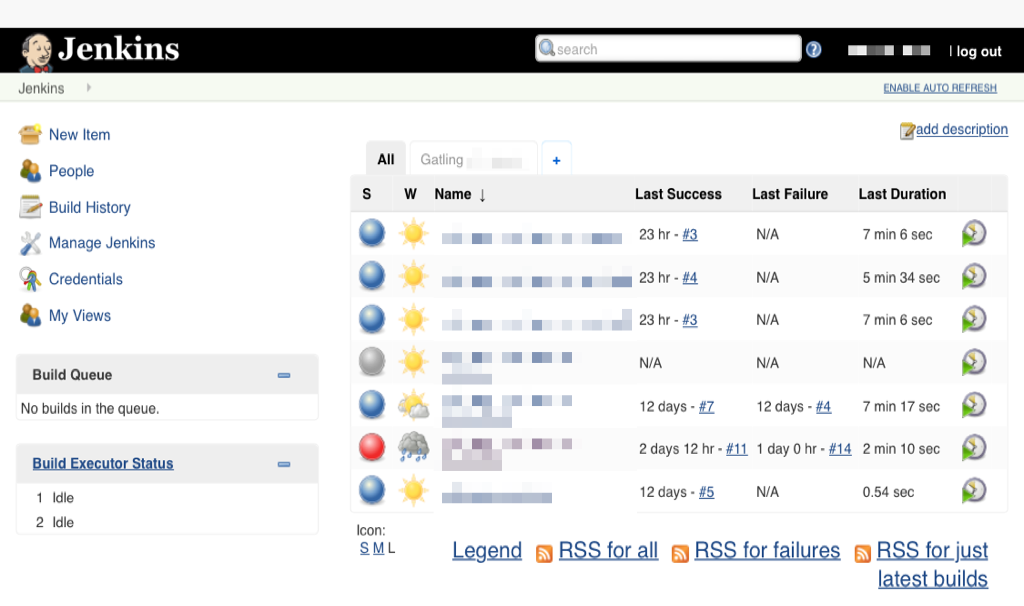

The result of this approach is shown below

Why do we think that the whole is greater than the sum of its parts?

- Each authenticated developer can manually start a performance test from the browser

    - No need to install proprietary software on a developer’s box

- Jenkins’ Git integration ensures that the latest version of the test scripts is always executed

    - No more wasted time keeping test scripts synchronized across multiple installations

- Jenkins’ Web UI provides great visibility and a historical view of executed performance tests

    - No more wondering how the performance test performed last time

- The Jenkins CI server comes with useful features such as a scheduler and email notifications

    - No need to start performance tests manually

- Combining the Jenkins scheduler with Gatling’s Assertion API [7] allows Continuous Performance Testing

    - No more unnoticed degradation of server response times

Looking At Real Code

Now it’s time to look at real code implementing a Gatling performance test

    package at.beeone.george.test.gatling.simulation.george.at.rampupload

    import at.beeone.george.test.gatling.common.ConfigurableSimulation
    import at.beeone.george.test.gatling.simulation.george.at.TenantTestBuilder
    import io.gatling.core.Predef._

    class Test extends ConfigurableSimulation {

      val users = scenario("Rampup Load") {
        repeat(getSimulationLoops) {
          feed(userCsv.circular.random)
            .exec(
              TenantTestBuilder.create("performance")
            )
        }
      }

      setUp(
        users.inject(rampUsers(getSimulationUsers) over getSimulationUsersRampup))
        .maxDuration(getSimulationDuration)
        .protocols(httpConfServer)
    }

Please note that this is the unaltered Scala code and from a developer’s point of view it is a thing of beauty

- We create a ramp-up scenario iterating over a CSV file containing the user’s credentials

- In order to support multiple performance test scenarios the list of REST calls is refactored into a TenantTestBuilder

- The configuration handling is completely hidden behind a ConfigurableSimulation, giving us complete freedom to change the underlying code and functionality

Gatling at Erste Group

Nine months after starting the Gatling “side-project” we can tell a modest success story

- Extending and maintaining the test code is a breeze, and it currently covers approximately thirty REST endpoints

- The Gatling tests regularly find issues, which are handled quickly by the back-end development teams

- Other teams are showing interest in using Gatling for their internal performance testing

- The daily testing exercise provides good visibility for the project manager and team leads

Is Gatling For You?

While we are happy with Gatling and our test infrastructure this question is hard to answer. First and foremost, Gatling is a code-centric tool which requires solid development skills for the initial setup. From a developer’s perspective this approach has a lot of advantages

- The IDE of your choice helps with syntax-highlighting, auto-completion, re-factoring and debugging

- There are no huge XML files which change their content auto-magically on saving which caters for version control and file-compare utilities

- There is Scala code at your fingertips allowing you to add useful functionality

Having said that there is the “Gatling Recorder” to help with recording an initial test scenario but turning the generated output into maintainable Scala code still requires development skill. On the other hand, extending and maintaining a well-written Gatling test suite is easy and can be done by members of any QA team – so there is light at the end of the tunnel if you have access to Scala or interested Java developers.
