How Does Automation Testing Impact Manual Testing?

- This topic has 16 replies, 14 voices, and was last updated 8 years, 5 months ago by Ronan Healy.


May 11, 2015 at 10:09 am #8053

Seeing as more and more companies emphasise automation frameworks as a preferred method of testing, and automation tools seem to be more popular by the day, I was wondering: is this affecting the use of manual testing? Have you found that to be the case or not?

May 11, 2015 at 4:44 pm #8070

For me, I have tried to complement my manual testing effort by automating some API tests.

I can then kick these off manually as a regression suite and focus on exploring the edge cases.

We do need to rethink our overall test automation effort, though, as we suffer from the “ice cream cone”, or more of an hourglass, shape instead of the ideal test automation pyramid.

We feel that we should bring in dedicated automation testers to help write the tests, but we are still a way off from that, so in the meantime the developers write unit and integration tests that run on a continuous integration server.

May 15, 2015 at 1:51 pm #8130

If used skillfully, test automation frees testers from boring, repetitive work, and it can establish a baseline of quality quickly.

So it does affect the use of manual testing, in a positive way. Manual testing can be used where it is better suited (non-repetitive tests that require thought), while automated checking can be used to quickly validate against most requirements.

This is the way I use it, anyway.

May 18, 2015 at 8:01 pm #8160

I’ll describe my experience with three cases.

Case 1: Several years ago I worked on a team developing mobile applications for a smartphone vendor that was well known at the time. One type of automated testing, monkey testing, was a requirement of both the app marketplace and the customer. We learned from that example that even this simple, random technique of automated testing can bring value by testing application stability, memory consumption, etc., and we used this technique for the next few years while developing for different platforms. If a build is not stable enough to survive, say, a 30-minute monkey test, then there may be no reason to start manual testing of it; better to fix the problem(s) first.
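A monkey test of the kind described in Case 1 boils down to firing a long sequence of random user actions at the application and stopping at the first crash, while recording the random seed so the exact sequence can be replayed for the developers. The Python sketch below is illustrative only: `ToyPlayerApp`, its deliberately buggy `next_track`, and the `monkey_test` helper are hypothetical stand-ins, and on Android the platform’s own UI/Application Exerciser Monkey (`adb shell monkey`) would normally play this role.

```python
import random


class ToyPlayerApp:
    """Hypothetical app under test: a tiny media player with a deliberate bug."""

    def __init__(self):
        self.playlist = ["intro", "song-a", "song-b"]
        self.position = 0

    def next_track(self):
        self.position += 1  # bug: never wraps, so position can run past the end

    def previous_track(self):
        self.position = max(0, self.position - 1)

    def add_track(self):
        self.playlist.append(f"track-{len(self.playlist)}")

    def clear_playlist(self):
        self.playlist = []
        self.position = 0

    def now_playing(self):
        if not self.playlist:
            return "(empty)"
        return self.playlist[self.position]  # IndexError once position is out of range


ACTIONS = ["next_track", "previous_track", "add_track", "clear_playlist", "now_playing"]


def monkey_test(app_factory, events=1000, seed=None):
    """Fire random actions at a fresh app.

    Returns (seed, step, description) for the first crash, or None if the
    app survived every event. The seed makes a failing run reproducible.
    """
    if seed is None:
        seed = random.randrange(2**32)
    rng = random.Random(seed)
    app = app_factory()
    for step in range(events):
        action = rng.choice(ACTIONS)
        try:
            getattr(app, action)()
        except Exception as exc:  # any unhandled exception counts as a crash
            return seed, step, f"{action} raised {type(exc).__name__}"
    return None
```

A build gate in the spirit of Case 1 would run something like `monkey_test(ToyPlayerApp, events=5000)` and hold back manual testing whenever it returns a crash report instead of `None`; the reported seed lets a developer replay the same event sequence.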

Case 2: A few years ago I worked on another team, developing a tablet application for top management. Apart from a monkey test similar to Case 1 (but this time developed by myself), we also used server API tests made with SoapUI, because it is better to know that your API doesn’t have regressions than to start a manual testing round and run into client malfunctions caused by an API failure.

Case 3: Even when working on very simple manual scenarios, you may want to automate some of them. For example, say you are testing a complicated website and you know that the backend servers and configuration can fail (or are already failing), and the failure makes user login impossible. If you don’t want to check user login manually every time, you can write a simple login automation script using Python and Selenium, Java and Selenium, or Robot Framework and Selenium.
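For Case 3, a login check needs only a handful of WebDriver calls. A minimal sketch in Python, under loud assumptions: the element IDs (`username`, `password`, `login-button`, `logout-link`) and the URL in the example comment are hypothetical, and the function accepts any object with Selenium WebDriver’s `get`, `find_element` and `find_elements` methods, so it can also be exercised without a browser. In real Selenium code an explicit wait with `WebDriverWait` is the more usual way to wait for the logged-in page; a plain polling loop keeps this sketch free of dependencies.

```python
import time

# Hypothetical element IDs on the login page; replace them with the real ones.
USERNAME_FIELD = "username"
PASSWORD_FIELD = "password"
SUBMIT_BUTTON = "login-button"
LOGGED_IN_MARKER = "logout-link"  # element assumed to appear only after a successful login

# Selenium's By.ID constant is the plain string "id", so it is used directly here.
BY_ID = "id"


def login_smoke_check(driver, login_url, user, password, timeout=10.0, poll=0.5):
    """Log in through the UI and report whether the logged-in marker shows up.

    `driver` is expected to behave like a Selenium WebDriver
    (get, find_element, find_elements).
    """
    driver.get(login_url)
    driver.find_element(BY_ID, USERNAME_FIELD).send_keys(user)
    driver.find_element(BY_ID, PASSWORD_FIELD).send_keys(password)
    driver.find_element(BY_ID, SUBMIT_BUTTON).click()

    deadline = time.monotonic() + timeout
    while True:
        # find_elements returns an empty list, rather than raising, when nothing matches.
        if driver.find_elements(BY_ID, LOGGED_IN_MARKER):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll)


# Example run (needs the selenium package and a local Chrome):
#   from selenium import webdriver
#   driver = webdriver.Chrome()
#   try:
#       print(login_smoke_check(driver, "https://example.test/login", "qa_user", "qa_password"))
#   finally:
#       driver.quit()
```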

I hope from these three cases you can see how automating different things (mobile app, server API, website) can benefit you, helping to reveal problems faster or making repetitive tasks less boring.

May 20, 2015 at 4:04 pm #8198

Hi Daragh,

This is a very interesting topic. Manual testing vs. automation has always been a good debate.

I have worked on different projects, each with its own way of defining what should be tested manually and what should be automated.

On a backend project that provided resources to other projects, everything was tested using automation. It was API testing for SOAP and REST, and I used SoapUI for that. All tests were developed according to the requirements, and the regression tests ran every night. No manual testing was done.

On another project that developed a web application, releases were very frequent and included many features and specific test cases. I was part of an independent team responsible for testing and validating each release. Everything there was tested manually due to limited resources (people and time) and a high number of tasks. After some releases, another team started to automate tests, but independently of the project release schedule.

On another project that also developed a web application, I started with test design for manual testing and executed the tests manually. After a while I had enough time between releases to automate the regression tests. Now all regression tests are automated, and in every release I focus only on the new features, areas that have had trouble in the past, and display problems (browser-specific issues, elements not correctly aligned/displayed, etc.). The automated regression tests run in each release after deployment, while I use the time to manually check the things mentioned above.

I think it is very important to decide what can be automated and what cannot. Automation can certainly save a lot of time, but developing the tests can be very challenging and time- and effort-consuming. As a tester, it might be good to switch between manual testing and automation from time to time, if the project lifecycle allows it. Automation keeps a tester from getting bored with testing and helps him or her develop programming skills in addition to the ones needed for manual testing.

These are my experiences and ideas about manual testing and automation. I hope you find this information useful.

Regards,

Alin

May 27, 2015 at 1:09 pm #8241

Automation v Manual.

First point: automation is checking. Testing is exploratory in nature.

So I don’t think automation can replace testing in its entirety, just as a robot will not replace a human (in our lifetime).

Automation, though, can augment manual testing by performing repetitive/predictable tasks, e.g. using automation to log in as a particular profile or change profiles, or to check a form for content and field validation.

Automation is also great for repetitive regression checks.

Automation should not find any major issues; it should be there to check that nothing has changed or deviated from a known state.
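Checking that nothing deviates from a known state is often implemented as a comparison against a stored baseline (sometimes called a snapshot or golden-master check). A minimal sketch, assuming the output under test can be captured as JSON-like nested dicts and lists with string keys, such as a parsed API response; the function name and the sample fields are hypothetical.

```python
def diff_against_baseline(actual, baseline, path="$"):
    """Return human-readable differences between actual output and a stored baseline.

    Assumes JSON-like data: dicts with string keys, lists, and plain values.
    """
    diffs = []
    if isinstance(baseline, dict) and isinstance(actual, dict):
        for key in sorted(baseline.keys() | actual.keys()):
            child = f"{path}.{key}"
            if key not in actual:
                diffs.append(f"{child}: missing (expected {baseline[key]!r})")
            elif key not in baseline:
                diffs.append(f"{child}: unexpected new field {actual[key]!r}")
            else:
                diffs.extend(diff_against_baseline(actual[key], baseline[key], child))
    elif isinstance(baseline, list) and isinstance(actual, list):
        if len(actual) != len(baseline):
            diffs.append(f"{path}: length {len(actual)} != baseline {len(baseline)}")
        # Compare the overlapping items position by position.
        for i, (a, b) in enumerate(zip(actual, baseline)):
            diffs.extend(diff_against_baseline(a, b, f"{path}[{i}]"))
    elif actual != baseline:
        diffs.append(f"{path}: {actual!r} != baseline {baseline!r}")
    return diffs
```

In a nightly regression run, the baseline would be a reviewed response saved from a known-good build, and any non-empty diff list goes to a human to judge: either a regression, or an intended change that calls for a new baseline.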

Automation frees up the manual ‘tester’ to do the interesting and clever tests that there wouldn’t normally be time for (as long as both the design and the automation are stable).

My own experience suggests that automation can help, but if it is not done right, and if the developers keep changing the API or tags, then automation takes as much time to maintain as it would take to test manually. I’ve seen both useful and useless automation efforts. But I’ve yet to see highly effective automation, i.e. automation that prevents defects from escaping and doesn’t take much maintenance.

May 28, 2015 at 12:32 pm #8259

When applied correctly, test automation certainly should impact manual testing, and in a positive way. As has already been mentioned, automation should be able to check for regression issues with ease, which in theory should give a tester more time to be creative in their testing. On top of this, you should be able to build tools that support your testing, again allowing you to be more creative. I also think it can uncover unknowns if used correctly (for example, by using random/different data between test runs).
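One common way to use random/different data between test runs without losing reproducibility is to draw a fresh seed for every run, record it, and allow a failing run to be replayed with the same seed. A minimal sketch: the standard library’s `urllib.parse.quote`/`unquote` round trip stands in for the system under test, and the alphabet and string lengths are arbitrary choices.

```python
import random
import string
from urllib.parse import quote, unquote

# Arbitrary mix of ASCII and non-ASCII characters to draw test data from.
ALPHABET = string.ascii_letters + string.digits + string.punctuation + " \t" + "éß漢字😀"


def random_text(rng, max_len=30):
    """Build a random string of 0..max_len characters from ALPHABET."""
    return "".join(rng.choice(ALPHABET) for _ in range(rng.randint(0, max_len)))


def check_round_trip(runs=200, seed=None):
    """Check unquote(quote(s)) == s on random strings.

    Returns (seed, first failing input or None); log the seed so a failure can be replayed.
    """
    if seed is None:
        seed = random.randrange(2**32)  # different data on every run...
    rng = random.Random(seed)           # ...but reproducible from the recorded seed
    for _ in range(runs):
        text = random_text(rng)
        if unquote(quote(text)) != text:
            return seed, text
    return seed, None
```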

Frankly, it’s tiring how often a mention of automation turns into automation v manual. That isn’t how it works: you’re given something that needs testing, and you choose the best means to test it.

May 29, 2015 at 10:58 am #8274

I find automation good for performing smoke tests on a new build. I also find it good for entering unsupported data in certain scenarios to see how robust the software is.
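Entering unsupported data to see how robust the software is can be automated as a simple probe: push a list of bad inputs through an entry point and sort the outcomes into accepted, cleanly rejected, or crashed. In this sketch `parse_quantity` is a hypothetical stand-in for the system under test, and treating `ValueError` as the clean-rejection signal is an assumption about how that code reports bad input.

```python
def parse_quantity(text):
    """Hypothetical system under test: parse an order quantity between 1 and 999."""
    value = int(text.strip())
    if not 1 <= value <= 999:
        raise ValueError(f"quantity out of range: {value}")
    return value


def probe_robustness(func, inputs, expected_error=ValueError):
    """Run func on each input and classify the outcome."""
    report = {"accepted": [], "rejected": [], "crashed": []}
    for item in inputs:
        try:
            report["accepted"].append((item, func(item)))
        except expected_error:
            report["rejected"].append(item)  # bad input refused in the expected way
        except Exception as exc:  # anything else is an unhandled failure
            report["crashed"].append((item, type(exc).__name__))
    return report


# Mostly unsupported inputs, plus one valid value with stray whitespace.
PROBE_INPUTS = ["", "abc", "-1", "0", "1000", "12.5", "9" * 50, None, "  7 "]
```

Run against the hypothetical parser, the probe flags `None` as a crash (`AttributeError`), which is the kind of finding this robustness check is meant to surface.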

I agree with the sentiments above that automation cannot replace manual testing completely, but it does act as a good base for seeing how stable a product is.

At the beginning of a software cycle, automation is good for the monkey tests that Roman describes above.

As the cycle develops, automation is good for regression checks against features that are mature and are not expected to change. I think manual testing is always good where some thought is needed to go through a software flow, but once that flow is nailed down, automation is good for ensuring that it keeps working from then on.

June 4, 2015 at 10:43 am #8322

As Steve suggested, automation is checking. If so, is it the case that testing skills are lost if you concentrate too much on automation?

June 4, 2015 at 11:16 am #8324

Daragh,

That’s another open-ended question that could be interpreted in different ways.

‘concentrate too much on automation’ – Do you mean:

1- Automating the checks without deciding what they are: then you’re not testing, you’re automating checks, and a programmer can do that without knowing how to test. I’ve set up a team like this in the past, with a dev dedicated to the test team to automate the checks and build the automation framework, whilst the testers got on with testing.

2- Deciding what needs to be checked and automated: that’s a tester’s role. In doing that, you would perform some testing to investigate the product and then pick out of those tests the parts that are checks to be automated. This doesn’t dull your testing skills; it frees up time in the next cycle to do more testing while the checking you do regularly is automated (done for you).

There have been discussions in the past about exploratory testing, and I think most people will agree that most testers perform exploratory testing during the test design phase. This is very much the same philosophy, i.e. the exploratory tests during design identify the checks that need to be automated. Then, after the checks are automated, the tester is free to test more and to perform more exploratory tests.

Can automation be to the detriment of the manual tester? Yes, it can; and no, it can enhance them. It depends on how the knife is wielded and how the whole dev/test team is set up.

The understanding and approach of the management is key, as it is to any test role.

June 4, 2015 at 11:19 am #8325

Steve did suggest that, but he also mentioned there was more to it: ‘Automation though can augment Manual testing.’ This suggests automation can be used for more than automated regression testing and can be leveraged to improve manual testing, for example by allowing me to jump to stage 19 of a 20-stage process by clicking a button rather than filling out the first 18 stages each time. More time for targeted manual testing, enabled by automation.
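The jump-to-stage-19 helper can be as simple as replaying canned answers for the earlier stages, so the manual tester starts where the interesting work is. In this sketch `Wizard`, its `submit` method, `canned_answer`, and `fast_forward` are all hypothetical; against a real product the same idea might drive the UI, call an API, or seed a database directly.

```python
class Wizard:
    """Hypothetical 20-stage form: each stage must be submitted in order."""

    STAGES = 20

    def __init__(self):
        self.current_stage = 1
        self.answers = {}

    def submit(self, data):
        if self.current_stage > self.STAGES:
            raise RuntimeError("wizard already completed")
        self.answers[self.current_stage] = data
        self.current_stage += 1


def canned_answer(stage):
    """Hypothetical valid data for a stage; real tests would use realistic fixtures."""
    return {"stage": stage, "value": f"default-{stage}"}


def fast_forward(wizard, target_stage):
    """Submit canned data until the wizard is sitting on target_stage."""
    if not 1 <= target_stage <= Wizard.STAGES:
        raise ValueError(f"target_stage must be between 1 and {Wizard.STAGES}")
    while wizard.current_stage < target_stage:
        wizard.submit(canned_answer(wizard.current_stage))
    return wizard
```

Calling `fast_forward(Wizard(), 19)` fills in stages 1 to 18 and leaves the tester on stage 19.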

As usual, it depends on context. I’ve seen the checking v testing argument used to claim that automated testing can tell you nothing new about the system (from memory of the original article, this is NOT the spirit in which it was written). I can write scripts to test/check assumptions you hadn’t considered; by the original article’s definition this is still a check, and I agree, but others who have thrown checking v testing about haven’t considered it. They only automate what is already known; they do not consider the unknown. Automation, when used correctly, can offer a lot of value, more so than most naysayers seem to think.

Automation is part of testing. Within automation there are also several parts, e.g. test toolsmithing, automated regression testing, and automated performance tests. Manual testing is also part of testing, and again there are several parts, e.g. test plans/strategy, exploratory testing, etc. At its core, it becomes a specialisation vs generalisation argument. If I work a lot on automated regression suites and not much on manual exploratory sessions, my abilities in manual exploratory sessions are likely to be worse than those of someone who spends 90% of their day doing that activity.

June 5, 2015 at 2:56 am #8329

Nicholas said that when discussing automation vs. manual testing, it’s best to talk about what tool fits the purpose, not whether one or the other is best in all circumstances. That’s the background for his saying that it ultimately depends on context. Context means the architecture of the SUT (system under test), whether low-level design specs are available (whether ANY documentation is available), how you’ve organized your iterative processes (Agile or waterfall), whether you have competent automation engineers, how many times the automation will be used, and so on.

Customers (and our bosses, perhaps) are sometimes put off by a barrage of questions like these that we think are relevant. But we need the information for our work breakdown structure or epic/sprint planning. I would love to have automation at every level, beginning with API-based unit tests (or behavior- or test-driven equivalents), plus automation for slightly more integrated tests within the functional domain of a single component, then automation for SIT (system integration testing). One major advantage of automation (as alluded to in other posts in this thread) is that if a developer injects a fault at a late stage that is discovered by exploratory testing but not by automation, you can begin your inquiries in the areas not covered by automation, assuming that you know what is and isn’t covered by your automation. Usually, automation at every level is not possible because it is considered too expensive. How much are you willing to spend (how much do you need to spend) on confidence?

Not everything we call automation is worth the investment. Automating 500 tests doesn’t mean that you’re achieving 10 times the coverage that automating 50 tests would provide. Those are just numbers. The 50 tests could be core go/no-go quality gate triggers, while the 500 could boil down to 2 tests with 498 equivalence variations. Too many of us believe that numbers and metrics carry intrinsic meaning; they may have meaning, but only in context.
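The point about 500 tests collapsing into a handful can be made concrete with equivalence partitioning: inputs that the code should treat the same way belong to one class, and one representative per class usually tells you as much as the whole batch. A minimal sketch, assuming a hypothetical rule that an age field accepts 18 to 65 inclusive; this rule has three classes rather than the two in the post, and the names are illustrative only.

```python
def age_class(age):
    """Hypothetical rule: ages 18 to 65 inclusive are valid."""
    if age < 18:
        return "too young"
    if age <= 65:
        return "valid"
    return "too old"


def representatives(inputs, classify):
    """Keep the first input seen for each equivalence class."""
    reps = {}
    for value in inputs:
        reps.setdefault(classify(value), value)
    return reps


candidate_inputs = list(range(500))  # 500 "tests", one per age value 0..499
```

`representatives(candidate_inputs, age_class)` reduces the 500 candidates to three values (0, 18 and 66). Boundary values (here 17, 18, 65 and 66) are usually worth keeping as well, since off-by-one mistakes cluster there.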

You need to prioritize what you cover, and throw out any tests whose failure would not be regarded as significant enough to pay an $85/hr developer to fix. Has the code been developed with attention to ease of testing? Just as some tests cannot be done without automation, other tests are too difficult (expensive) to automate for the automation to be justified.

Such caveats apply to manual testing as well. Who’s doing the testing? “Oh, she’s only a manual tester.” Well, what kind of manual tester? It’s all about what you know, and what you can do with that knowledge. Some of the best testers I’ve had in my teams have had production-level development skills, but they apply that knowledge to figuring out where vulnerabilities are likely to be found in end-to-end scenarios. Many years ago, when Microsoft began taking secure code seriously, their best tester (i.e., the one who found more bugs than anyone else, with the highest percentage of high-severity bugs) did all of his testing manually, in the sense that he walked through the code to spot likely vulnerabilities, then figured out what tests would prove the case and executed them manually.

But the original topic here was how automated testing affects manual testing. Many have already said that it gives manual testers time to be creative, to look at problems that have not been thought about. That is absolutely the best reason for automation, aside from relieving us of sleep-inducing boredom. For some kinds of automation (again, context is everything), the process of developing the automation is itself extremely effective in teaching testers how the SUT operates. Someone mentioned monkey testing. A guy who was instrumental in defining the limits of monkey testing used to work for me. His ability to find bugs by manual testing was greatly improved by figuring out the best time in the SDLC to use monkey testing: when its results were “actionable”, i.e. when monkey testing generated data that could help developers harden the code and when it was useless, and so on.

On the negative side, I’ve seen some people focus entirely on automation and lose the ability to identify with the end user. Does that matter for building API-based automation? Probably not, if the scope of one’s activities is entirely restricted to API-level transactions and data transformations. But someone needs to monitor the interface between the UI and the middleware, and I would much rather that person were as interested in user experience as in the efficiency of algorithms.

June 8, 2015 at 12:06 pm #8368

1) Not everything can be automated. Period.

2) Not everything that can be automated should be automated, because the effort can outweigh the value of doing so.

3) Automated tests can be brittle and fall over easily.

4) The maintenance of automated tests can quickly become a burden.

5) Automated tests can raise the question of whether something is true to life or not (particularly when using canned data).

6) Blindly trying to automate everything in sight is ill-advised.

7) Manual testing has proven time and again that it identifies issues an automated solution could not possibly hope to find.

8) Automation should look to augment the manual test effort. It is not a replacement.

9) Automation can prove expensive compared with a manual test approach, and not just in monetary terms.

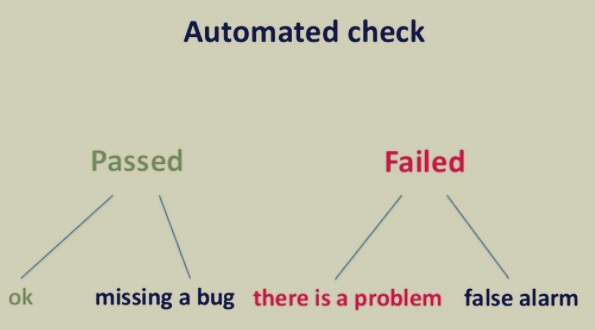

10) Automated tests can give false positives. Do you really know what it is verifying and what it isn’t?June 8, 2015 at 2:32 pm #8370June 15, 2015 at 12:23 pm #8439Padmaraj, I like your mind map but it applies equally to manual testing.

Tests – whether automated or manual – can also have defects. Some test defects cause false alarms. Normally the tester will spot this quickly and correct the test. Sometimes it is necessary to refer back to an analyst to ensure that the tester has the correct understanding of the current requirements.

Other test defects cause bugs to be missed, usually because the test fails to exercise the buggy code. Occasionally, the test and the code have the same error. This is the category where an alert manual tester can pick up the bug despite the apparent pass. However, manual testers can and do miss these errors.
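The case where the test and the code have the same error is easy to fall into when the expected value is computed with the same logic as the code under test. A small illustration with a hypothetical, deliberately buggy `apply_discount`: the first check derives its expected value from the same flawed formula and passes, while the second uses a value worked out independently from the requirement and catches the defect.

```python
def apply_discount(price, percent):
    """Hypothetical code under test. Bug: divides by 10 instead of 100."""
    return price - price * percent / 10


def check_with_shared_formula():
    # The oracle re-implements the same (wrong) formula, so the check passes.
    expected = 200 - 200 * 10 / 10
    return apply_discount(200, 10) == expected


def check_with_independent_oracle():
    # Worked out by hand from the requirement: 10% off 200 is 180.
    return apply_discount(200, 10) == 180
```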

June 17, 2015 at 9:36 am #8494

If I repeat a test 5 times manually, I automate it!!!
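A rule like “repeat it 5 times, then automate” is a rough break-even estimate. One way to make the trade-off explicit, assuming you can estimate the one-off cost of automating a test, the cost of one manual run, and the per-run cost of running and maintaining the automated version (the helper name and the figures in the checks are made-up examples, in minutes):

```python
import math


def break_even_runs(build_cost, manual_cost_per_run, automated_cost_per_run):
    """Smallest number of runs after which automating is no more expensive than manual runs.

    Solves n * manual >= build + n * automated for the smallest whole n.
    Returns None if each automated run (including upkeep) costs as much as
    or more than a manual run, in which case automation never pays off.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_cost / saving_per_run)
```

For example, 480 minutes to automate a 120-minute manual test that costs 30 minutes per automated run (execution plus maintenance) breaks even after 6 runs. A high per-run maintenance cost, as with brittle tests, pushes that number up quickly.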

Test automation reduces manual regression effort. It helps manual testing by allowing more time for exploratory testing. Test automation also works beyond human beliefs: the belief that things which work most of the time will work all the time, and the belief that things which broke once could break again.

But manual testing is not replaceable, because a robot cannot replace a human. Automation just helps.

August 3, 2017 at 9:19 am #17004

Some great thoughts here. This has been discussed a lot recently, and I think that automation is starting to have a bigger impact on manual testing, but in many ways it is about maintaining your own skills.
