When I started my current job last year, it brought positive changes and new challenges. I went from testing big, complex banking and financial systems to testing apps for the retail market. Not only had I never tested mobile applications before, but it was also the first time I had joined a project from its very beginning. To me that sounded exciting, and from what little I knew about the project, it didn’t sound too difficult. How hard can it be to test an app on a mobile, right? It turned out to be the most complex project I have taken part in during my three years in testing. This was supposed to be an agile project, but agile was not done as it should have been. I know that I am not the only person who has experienced a project that doesn’t go as planned. Unfortunately, testing always comes last, and we are always pressed for time. So this time we had to think differently about how we could test as much as possible within a short period of time. Here is a guide on what to do when there isn’t enough time for testing:

The Project

The customer was a big retailer with over 500 stores in Norway. The goal was to make the merchant’s day easier when it came to hiring new people, setting up the shift plan, and managing salaries and other expenses. With that in mind, different suppliers built five applications.

My employees:

1. This app handles new hires, changes to salary and position type, the registration of emergency contacts, and so on. In short, an HR system in one app. This app is available on iOS, and there is an HTML client as well.

Shift planning:

2. The main goal of this app is to provide an easy way to plan the store’s shifts. Here a merchant registers all vacations and sick days as well. At the end of the month, he also handles salaries. This application is currently available as an HTML client, and an iOS version is being developed.

Time registration:

3. Employees use this app in the store itself when they come to work. An iPad hangs on a wall, and an employee simply taps a picture of himself to clock in and out and to take a break. The app is mainly used on an iPad, but it works in all browsers (the HTML client) and on iOS and Android.

Time acceptance:

4. A deviation is generated based on the planned shifts, the employee’s clock-ins, and whether they came late or worked extra. A merchant has an app he uses to handle these deviations; he can choose to pay or not to pay for a deviation with a simple swipe to the left or the right. This app is developed for iOS and Android.

Employee app:

5. The last app is for the employees. It shows their shift plan, and they can send messages to each other and to the merchant. They can also apply for vacation and other absences. This app is available on iOS and Android.

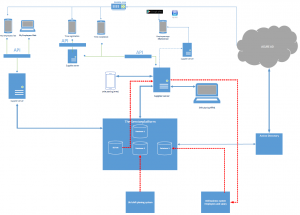

This is the short version; the applications are a lot more complex, but for the purpose of this article it is all you need to know. As you may have noticed, these apps were developed to work on multiple platforms. HTML, iOS and Android had to be tested, and browsers like IE11, Chrome and Safari needed to be tested as well. However, that was not the hard part. The hard part was the integration testing.

App 1 (My employees) spoke only to app 2 (Shift planning), and app 2 spoke to all of the other apps. App 3 (Time registration) spoke to app 2 and app 4 (Time acceptance), and finally app 5 (Employee app) spoke to app 2. To make this happen, there is a net of cloud-based servers and a service platform that distributes information. The five applications and the service platform were delivered by three main suppliers. In addition, all of them had different operations suppliers. So in total, six suppliers and the customer’s internal systems and servers had to work together to make the applications work as one. The project as a whole included almost 50 people.
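The links between the apps can be written down as a small graph, which makes it easy to list every pair that an integration test has to cover. This is only a sketch based on the description above; the names `APPS`, `LINKS`, `neighbours` and `integration_checklist` are made up for illustration and were not part of the project.

```python
# App numbers and names follow the list of five applications in this article.
APPS = {
    1: "My employees",
    2: "Shift planning",
    3: "Time registration",
    4: "Time acceptance",
    5: "Employee app",
}

# Each frozenset is one undirected link ("spoke to") between two apps.
LINKS = {
    frozenset({1, 2}),
    frozenset({2, 3}),
    frozenset({2, 4}),
    frozenset({2, 5}),
    frozenset({3, 4}),
}


def neighbours(app: int) -> list[int]:
    """Return the numbers of the apps that exchange data with `app`."""
    return sorted(
        other for link in LINKS if app in link for other in link if other != app
    )


def integration_checklist() -> list[str]:
    """Return one line per app pair that needs an integration test."""
    pairs = sorted(tuple(sorted(link)) for link in LINKS)
    return [f"{APPS[a]} <-> {APPS[b]}" for a, b in pairs]


if __name__ == "__main__":
    for line in integration_checklist():
        print(line)
```

Five links does not sound like much, but every one of them crossed supplier boundaries and went through the service platform, and Shift planning sits in the middle of four of them.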

This was our challenge: two testers and one test manager against six development teams. We were not too worried, though; it was the middle of August, and the pilot was to start in the middle of October. This was an agile project, and development had planned their sprints to be ready in time. So our planning began, but when it was our turn to start, development failed to deliver on time. All right, we thought, this can happen to anybody. Therefore we re-planned. Again development did not deliver on time, and so this dance continued until there were four weeks left to the planned pilot date. At this point we started to be quite concerned. Until then, the only thing we had been able to test was some separate functionality in the different applications, and only 19 bugs had been reported. A complete integration test had not been executed.

All our training, all the theory that teaches us how to successfully execute a test, didn’t work. Yes, we planned our sprints so they would match the development plan, and we wrote descriptions and made a risk assessment for each PBI (product backlog item). We wrote test cases, got the test environment up and running, and we reorganized when the development sprints weren’t finished in time. When we came to the point where there were four weeks left, we knew that we couldn’t follow the sprints anymore. We didn’t have time to run every test case and document every step, so what could we do now?

We threw everything called routine and by the book out the window, thought outside the box and got to the finish line on time.

How we did it

1. Walkthrough of the requirements for each application and the service platform

2. Risk analysis

3. Prioritizing

4. Testing board

5. Exploratory testing

6. Report bugs

Walkthrough

1. We started with a walkthrough of the requirements that we had; not everything was documented. Instead of writing test cases and tasks, we used Post-it notes. Yes, you read that correctly: no computers, no planning systems like Jira, ALM or TFS, just Post-it notes where we wrote down all of the requirements.

Risk Analysis

2. After that, we sorted them by GO or NO GO criteria as a risk analysis.

Example one:

Requirement: As a merchant, I want to be able to log in.

NO GO: If a merchant can’t log in, we can’t start the pilot.

This is an example of a requirement that had to be met, otherwise we couldn’t go live.

Example two:

Requirement: As a merchant, I want to be able to add an emergency contact for an employee.

GO: Yes, if it doesn’t work this is a bug, but it is not a bug that will stop basic, end-to-end functionality in the system. We can live with it in the pilot.

Everything related to integration had GO and NO GO criteria as well. When it came to the testing, we focused on the requirements that would affect the merchant directly if they were to fail.

Prioritizing

3. In step three we prioritized. We asked ourselves, “Which requirements have to be met for the basic functionality to work?” The NO GO criteria were the ones that we started with, and after that, our focus was on the GO criteria.
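We did all of this on paper, but the logic of steps 2 and 3 is simple enough to sketch in a few lines. The sketch below assumes a requirement is just a text, a GO or NO GO label and a test result; the names `Risk`, `Requirement`, `order_for_testing` and `pilot_can_start` are made up for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Risk(Enum):
    NO_GO = 0  # if this fails, the pilot cannot start
    GO = 1     # if this fails, it is a bug we can live with in the pilot


@dataclass
class Requirement:
    text: str
    risk: Risk
    passed: Optional[bool] = None  # None means "not tested yet"


def order_for_testing(reqs: list) -> list:
    """Put NO GO requirements first; sorted() keeps the original order within each group."""
    return sorted(reqs, key=lambda r: r.risk.value)


def pilot_can_start(reqs: list) -> bool:
    """True only when every NO GO requirement has been tested and passed."""
    return all(r.passed is True for r in reqs if r.risk is Risk.NO_GO)


# The two example requirements from the risk analysis step.
requirements = [
    Requirement("As a merchant, I want to be able to add an emergency contact for an employee", Risk.GO),
    Requirement("As a merchant, I want to be able to log in", Risk.NO_GO),
]

if __name__ == "__main__":
    for req in order_for_testing(requirements):
        print(req.risk.name, "-", req.text)
    print("Pilot can start:", pilot_can_start(requirements))
```

An untested NO GO requirement blocks the pilot just like a failed one, while a failed GO requirement is reported as a bug without stopping anything.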

Testing Board

4. We made a board where each of the applications got its own column, and put the Post-it notes where they belonged.

Exploratory Testing

5. At this point we were ready to start the test. We used boundary value analysis to test the time registration app, and exploratory testing for the remaining applications. The NO GO criteria were first in line to be tested. When a requirement had been tested, it got a smaller Post-it note that said OK or NOT OK.
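For readers who have not used boundary value analysis, here is a minimal sketch of the idea: test just below, on and just above each edge of a valid range. The rule in the example, a five-minute grace period before a clock-in counts as late, is a hypothetical one chosen for illustration and not the actual rule in the time registration app; `boundary_values`, `is_late` and `GRACE_MINUTES` are made-up names.

```python
def boundary_values(lower: int, upper: int) -> list[int]:
    """Three-value boundary analysis for the inclusive range [lower, upper]."""
    candidates = [lower - 1, lower, lower + 1, upper - 1, upper, upper + 1]
    # A set removes duplicates when the range is very narrow.
    return sorted(set(candidates))


# Hypothetical rule: clocking in up to GRACE_MINUTES after shift start is on time.
GRACE_MINUTES = 5


def is_late(minutes_after_start: int) -> bool:
    """Return True when the clock-in falls outside the grace period."""
    return minutes_after_start > GRACE_MINUTES


# Minutes relative to shift start that are worth testing, with the expected result.
cases = {m: is_late(m) for m in boundary_values(0, GRACE_MINUTES)}

if __name__ == "__main__":
    for minute, late in cases.items():
        print(f"{minute:+d} min -> {'late' if late else 'on time'}")
```

Six values are enough to cover both edges of the range, which is exactly why the technique suits a situation where time is short.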

Report Bugs

6. The bugs were documented with screenshots and steps in TFS (Team Foundation Server), and we had bug board meetings every morning with a representative from each of the suppliers to prioritize the bugs and identify those that needed to be fixed before others.

In addition, there were stand-up meetings with all testers, the team leads from development and the customer for a daily status on progress. You might think that steps 1 to 4 took a long time, but they didn’t; in two days everything was ready. Exploratory testing turned out to be a very effective test technique in this case, since we did not have a complete set of requirements and acceptance criteria. We found many functional bugs, as well as integration bugs in behavior that was not specified in the requirements. In total, it resulted in 249 bugs in three and a half weeks.

Those of you who have used agile processes in your testing might have noticed that we kept many of the practices of agile testing, risk analysis, stand-up meetings and the bug board among them. However, we did not follow the agile process when it came to the test itself and the sprints.

Lessons learned

I have learned a lot from this project, and here are some of the points that I will find useful in the future, that might also help you:

– Do not over-plan a sprint: Only include items that you know you can finish. Overestimating what fits in a sprint will only lead to complications, overtime and a lot of re-planning.

– Have a good dialogue with other team leads: On a big project like this one, there were four team leads who were dependent on each other up to a certain point. With good dialogue, it is easier to know what the other teams need from your team, and it will make it easier to plan your sprint.

– Protect your team: It was easy to say yes to a developer if he needed help to find the source of a bug, and it was also easy to go to the developers if I needed information from the database, for example. But as a result, we did not spend our time on what we needed to get finished: the sprints. We all want to help, but it is important that team leads set clear rules that everything goes through them, and not through individual testers or developers.

– Never underestimate a regression test: A regression test is not the most exciting thing a tester does, but it has to be done. Just when we thought the solution was becoming stable enough, it started to become unstable. Therefore, we ran a regression test and found almost 40 bugs in one day. Regression tests need to be run regularly. Had we done it more often, we might not have had the setback we had.

When I look back, there is one thing I can pinpoint that made the whole process difficult for both developers and testers, and that is missing and unfinished requirements. It may seem that I have blamed the developers for what went wrong, but that is not the case; they worked just as hard as we did. It is hard to know what you are going to develop when you do not have the complete description.

There Isn’t Enough Time for Testing... Or Is There?

This article may not be what you expected, but minimizing the use of technology actually saved us a great deal of time, and deciding to test freely, without following the steps of a test case, helped us find bugs faster. ISTQB and agile testing are our bibles, our guides to success; however, that does not mean that we have to follow them blindly.

So when there isn’t enough time for testing, there are other ways to succeed; you just have to think outside the box.