Many people ask why we need multiple environments such as Development, Test and Production. How much did the test environment cost? What is its ROI? The answer depends on whether we can afford to have users down during business hours because a change did not work as intended. Downtime for a business-critical system can carry a significant cost. Unfortunately, calculating how much money the company saves by having a separate test environment is a much bigger challenge.

A test environment is where we can run experiments in a relatively safe manner. If some of those experiments harm the software or the environment, the good news is that we found the problems in Test, and by fixing them there we avoid downtime in production. A test environment serves multiple audiences, so building and managing it requires a variety of skill sets and experience.

So what tactics can we use to help team members understand the importance of a well-maintained test environment? Often these environments are loosely managed, resulting in utilization conflicts and schedule delays. Good practices and defined processes are needed to overcome these pitfalls. As the new CEO of Microsoft said, “Ours is not an industry that respects tradition - it only respects innovation”. This applies very much to test environment management. Good test environment management improves the quality, availability and efficiency of test environments, which helps teams meet milestones and ultimately reduces time-to-market and costs.

In this blog post, I will share my experience of improving test environment management while working at Dell Computers. I will highlight the practices and processes we implemented to underline the importance of good test environments.

What you will get:

- Understand the key challenges in environment management

- Gain ideas of good practices that may help

- See the value of good environments for quality and time to deliver

A typical day in a software project team ends with remarks such as:

Tester – “Only yesterday/couple of hours back this environment worked perfectly fine, and suddenly…”

Developer – “Configuration looks exactly the same – I haven’t missed anything in the package and it works in the development environment. So where is the problem?”

Test Environment Team – “Deployment Instructions were not clear. Software installation missed a certain component … application/environment is not accessible, Testing down time, got to roll back to the previous good version and work with Developer”

Project Manager – “Oh, downtime again, testers are sitting idle. Don’t understand why environment always has issues”

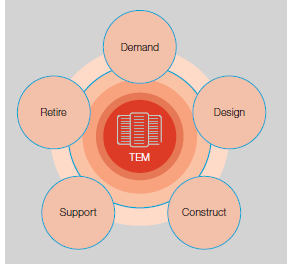

This is no different in a company like Dell Computers, where I was leading a Test Environment Management (TEM) team. It is said that Dell spends about $8 billion to set up an E2E test environment and around $8 million per annum to maintain and manage it. A typical TEM lifecycle starts with gathering the demand or requirements, just like the normal SDLC. The following figure explains the life cycle of TEM:

The complexity of the test application landscape, the number of applications in scope, and the integration architecture and technology variants can all increase the challenges of managing software test environments. These challenges include maintaining complex hardware and software deployments, documenting and defining a standard provisioning process, and carrying out general housekeeping. Some of the key challenges we always face are:

Unstable and late delivery of environments: The availability and stability of test environments are just as critical as those of production environments. Having too many or too few environments is a big problem. The issue arises when TEM teams are not engaged early in program and project discussions. Late engagement and late requests often end with no test environment being available. Unstable environments can derail the overall release process, because frequent changes to the software environments delay the release cycle and the test timelines.

Excessive testing cost: Service operation processes in test environments are less well defined and are not considered important, because the environment is not production and has no directly visible ROI. As a result, discipline in securing the environments, especially against unauthorized access, tends to lapse, and unannounced changes are made to the environment, which leads to further downtime. This delays production go-live dates, which can be very costly.

Consistency: With teams spread across the globe, development and integration are managed by different teams around the clock. It becomes all the more difficult to keep the code being developed consistent, especially when there are thousands of applications categorized as global and regional products. On top of this, the test environment is often not representative of the production environment. Hence the questions arise:

– Are the test results reliable?

– For both functional and non-functional aspects?

Good practices adopted by Dell

Now that we know the issues in test environments, how are we going to handle them? What are we going to do differently? As we know, “Ours is not an industry that respects tradition - it only respects innovation”. We have to improve the process with innovative ideas and introduce new techniques to overcome the issues.

With development and testing teams spread across the globe, there is a clear need to coordinate geographically dispersed operations and a cross-cultural workforce. This in turn means having diverse teams on board. When differing views are combined in problem-solving or decision-making, the outcome is usually better than that of a single view; that is the synergy of working with diverse groups. However, since test environments have no apparent ROI, it can be hard to drive these constructive inputs and innovative ideas. It takes firm leadership, and therefore management innovation too.

So what did the Dell TEM team do?

The TEM team made rigorous efforts to explain the importance of engaging it right from the inception of a project or program. It emphasized the merits of having the TEM team on board early, which lets the team know the roadmap.

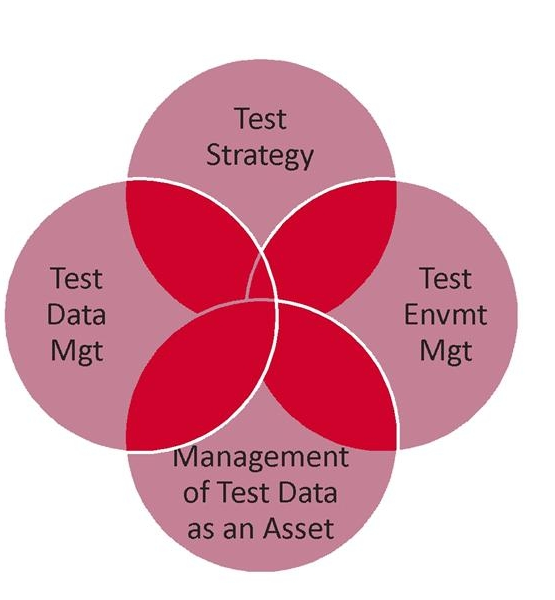

To resolve the existing issues, the TEM team introduced the practice of formally capturing test environment requirements in a document and produced a generic test environment strategy template, which stakeholders reviewed and signed off. This new process was formulated to engage the test environment team early in the SDLC. The document enabled us to capture requirements; allocate suitable resources with matching skill sets; plan and allocate an existing environment where feasible, or build new environments by procuring the required infrastructure; agree on the test data requirements; and, if required, plan for a data refresh.

This requirements-gathering document, also known as the Test Environment Strategy document, also helps the Test Environments Decision (TED) team approve or reject proposals from program management on the use of the available test environments. The TED team analyses each request and decides based on the roadmap, availability, program demand and requirements.

This document has now become part of the standard set of documents that must be produced and maintained in program and project delivery. All this led to increased confidence in the consistency of the test environments and, to a great extent, addressed the issue of late delivery of environments.

The next big issue is unplanned deployment of applications into the environment. How do we tackle this when teams are spread across the globe and working around the clock? How do we track which application received new code that caused an issue in the environment? The TEM team introduced an automated deployment tool. The tool lets developers raise a deployment request containing all the deployment instructions, the package details with the version and the source from which the code can be picked up, the servers on which the deployment has to be carried out, the prerequisites, and the reasons for the deployment along with the defects fixed or features added. An application is not deployed until a representative from the test team authorizes and approves the request. This keeps the test team aware that a change is coming and ensures the team is ready to test it. It also means that only authorized changes happen in the test environment, so the environment is more controlled and changes are easily traceable.

A request was never marked as Completed until the test team confirmed, through a quick smoke test, that the new change had not broken the environment. Any discrepancy was immediately reported to the development team, and it was resolved either by rolling back the change or, if only minor additions were needed, by a correction from development support. All these changes were then captured in the release document so that such incidents are avoided in future deployments. For critical programs, the smoke tests were automated so that the TEM team could run them itself without depending on the testing teams.
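The approval gates described above can be pictured as a small state machine. The following is only a minimal sketch under assumptions, not the tool Dell used: the class name `DeploymentRequest`, the `Status` values and the field names are all hypothetical and chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    # Hypothetical lifecycle states for a deployment request.
    RAISED = "raised"
    APPROVED = "approved"
    DEPLOYED = "deployed"
    COMPLETED = "completed"
    ROLLED_BACK = "rolled_back"


@dataclass
class DeploymentRequest:
    # Fields mirror the details a developer supplies when raising a request.
    application: str
    version: str
    package_source: str
    target_servers: list[str]
    reason: str
    status: Status = Status.RAISED
    history: list[str] = field(default_factory=list)

    def approve(self, tester: str) -> None:
        # Only a test team representative moves the request forward.
        if self.status is not Status.RAISED:
            raise ValueError("only a newly raised request can be approved")
        self.status = Status.APPROVED
        self.history.append(f"approved by {tester}")

    def deploy(self) -> None:
        # Deployment is blocked until the request has been approved.
        if self.status is not Status.APPROVED:
            raise PermissionError("deployment requires test team approval")
        self.status = Status.DEPLOYED
        self.history.append("deployed")

    def record_smoke_test(self, passed: bool) -> None:
        # A request is Completed only after a passing smoke test;
        # in this sketch a failure always ends in a rollback.
        if self.status is not Status.DEPLOYED:
            raise ValueError("smoke test applies only to a deployed request")
        self.status = Status.COMPLETED if passed else Status.ROLLED_BACK
        self.history.append(
            "smoke test passed" if passed else "smoke test failed, rolled back"
        )
```

Because every transition is appended to `history`, the request doubles as the audit trail that makes a change traceable to a specific application and version.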

End-To-End Monitoring

What about server and web service availability? Can we ensure that they are available 24×7? The answer is no. So how do we identify which server or service is down, given that we have thousands of servers and services supporting so many applications globally? There has to be a smarter way to check their availability quickly and easily, so as to minimize impact and downtime. The Dell TEM team, in liaison with the Empirix monitoring team, configured all the servers, services, components and application URLs for monitoring. This way, TEM is able to proactively detect issues that could impact the testing teams. It enabled comprehensive, end-to-end monitoring of multi-service, heterogeneous network environments, which speeds up troubleshooting and significantly reduces operating costs. Once a problem and the expected downtime are known, an incident report with all the details, the impact and an ETA is raised and communicated to the parties concerned. The report is updated in a timely manner with any actions taken to resolve the issue. It also lists the contact details of the Server Management team, the Middleware or Web methods administrators, or the DBAs with whom TEM liaises to resolve the issue. This enables the global TEM team to work on the issue during odd hours.
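To make the idea concrete, here is a minimal sketch of an availability sweep followed by an incident report. It does not represent the Empirix product or its API; the function names `http_probe`, `find_outages` and `incident_report`, and the example target names, are assumptions made for illustration, and only the Python standard library is used.

```python
from typing import Callable, Dict, List
import urllib.error
import urllib.request


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with an HTTP status below 400."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 400
    except (urllib.error.URLError, OSError, ValueError):
        # Covers HTTP errors, refused connections, timeouts and bad URLs.
        return False


def find_outages(
    targets: Dict[str, str],
    probe: Callable[[str], bool] = http_probe,
) -> List[str]:
    """Return the names of monitored targets whose probe failed, sorted."""
    return sorted(name for name, url in targets.items() if not probe(url))


def incident_report(down: List[str], contacts: Dict[str, str], eta: str) -> str:
    """Build a plain-text report listing each outage and whom to contact."""
    lines = [f"Incident: {len(down)} target(s) unavailable, ETA {eta}"]
    for name in down:
        lines.append(f"- {name}: contact {contacts.get(name, 'unassigned')}")
    return "\n".join(lines)
```

Passing the probe in as a parameter keeps the sweep testable without network access, and lets the same loop cover non-HTTP checks such as a database ping.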

These two measures helped bring down environment downtime, and proper tracking is now in place.

Cost of Testing

Another issue mentioned earlier is excessive testing cost. How did the Dell TEM team address this? With the Test Environment Strategy document in place, we knew the roadmap, so talks were initiated between the different project and program managers to see how efficiently and effectively teams could share the same environment at the same time. Environments were scheduled well in advance, with enough buffer time. Apart from that, wherever feasible, development teams were encouraged to use virtual machines instead of physical servers to cut costs. Existing applications were optimized and moved to virtual machines, and unwanted physical servers were decommissioned. This significantly reduced both the operating costs and the number of servers to be monitored.
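Scheduling with buffer time can be checked mechanically. The sketch below is illustrative only: the `Booking` type, the `conflicts` function and the one-day default buffer are assumptions, and it treats any two bookings of the same environment that fall within the buffer of each other as a conflict to be reviewed, even though in practice teams may agree to share an environment.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Booking:
    # Hypothetical record of one team's reservation of an environment.
    environment: str
    team: str
    start: datetime
    end: datetime


def conflicts(
    new: Booking,
    existing: list[Booking],
    buffer: timedelta = timedelta(days=1),
) -> list[Booking]:
    """Return existing bookings on the same environment that overlap the
    new booking or leave less than `buffer` between the two."""
    return [
        b
        for b in existing
        if b.environment == new.environment
        and new.start < b.end + buffer
        and b.start < new.end + buffer
    ]
```

A request that returns a non-empty list would go back to the program managers to negotiate sharing or a new slot.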

Having gained some control and improved the service (bearing in mind that control over these environments is never 100%, because of their fragile nature), the question now is: what next? We cannot sit back and assume that because the present processes are producing positive outcomes, they always will. We need to continuously improve the process and implement innovative ideas with real drive, work on our skill sets and knowledge, and be adaptive to change. This is exactly what the Dell TEM team is doing, and it is seeing some good results. I like the quote below and believe in it: