The Innovation Dilemma

Have you thought about a test data management strategy? Across all industries, speed is a critical business imperative. And now more than ever, applications are at the center of this race. More and more companies are becoming software companies, tying their hopes of differentiating themselves in the marketplace to the applications that they deliver to their customers, partners, and employees.

Consider GE, for instance, which invested $1 billion in a software center of excellence, known as GE Digital, in order to redefine itself as both a platform and an application company. Or Walt Disney Parks and Resorts, which created the RFID-enabled MagicBand that serves as an all-in-one entry ticket, hotel room key, and payment system. In the first half of 2016, more people used mobile or online banking applications than visited their local branch. As Marc Andreessen predicted in his 2011 WSJ article, “Why Software Is Eating the World,” the trend towards software has become ubiquitous (and the reference itself has become almost a cliché).

A byproduct of this trend is a surge in the demand for data, which is both the output of and the fuel for applications. But as applications generate more and more data, that data is becoming increasingly difficult and costly to manage. For every terabyte of data growth in production, for instance, there are ten terabytes spawned for development, testing, and other non-production use cases. Amidst this massive data proliferation across storage and servers, CIOs are ultimately asking themselves, “How do I bring 2x more products and services to market this year without increasing my costs?”

Importance of a Test Data Management Strategy

When it comes to testing software applications, almost everyone has a “data problem.” Software development teams often lack access to the test data they need, driving the need for better test data management (TDM).

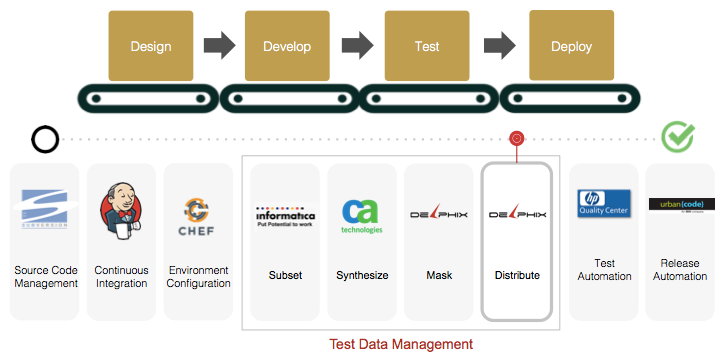

Historically, application teams manufactured data for development and testing in a siloed, unstructured fashion. As the volume of application projects has increased, many large IT organizations have recognized the opportunity to gain economies of scale through centralization. After observing large efficiency gains as the result of such initiatives, many IT teams have expanded the scope of TDM to include the use of synthetic data generation tools, subsetting, and most recently, masking to manipulate production data.

But large IT enterprises still can’t move fast enough. Why?

A New Set of Challenges

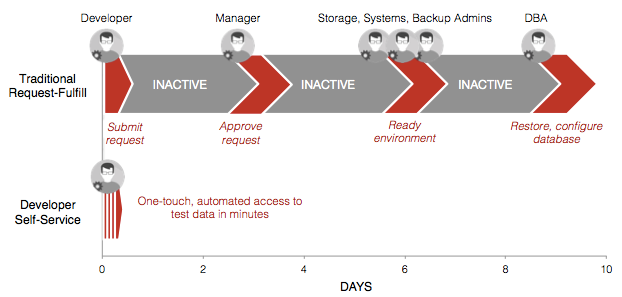

The rise of new software development methodologies demanding faster, more iterative release cycles has created a new set of challenges. Most notably, creating a test environment with the appropriate test data is a slow, manual, and high-touch process. Many organizations still use a request-fulfill model, in which ticket requests are often delayed or unfulfilled. Copying a dataset can take days or weeks and involve multiple handoffs between teams, creating a fundamental process bottleneck. Every element in the software delivery pipeline has been automated, it seems, except data distribution, which cripples the speed at which application projects can progress.

Before Delphix, everything had been automated except data distribution.

Bugs still cost the application economy billions of dollars per year. Part of the reason is that software development teams don’t have access to the right test data. Size, timeliness, and type of test data (be it production, masked, or synthetic) are all crucial design factors that are all too often sacrificed to save compute, storage, and time.

Another challenge is data security. With the recent spike in the number of data breaches, data masking has certainly begun to catch on, but not without adding friction to application development. End-to-end masking processes can ultimately take days, if not weeks, putting a damper on release timing.

Lastly, test data proliferation and resulting storage costs are continually on the rise. Yet despite efforts to improve data reusability, the needs of application teams are still largely underserved.

The number of TDM products on the market continues to expand, extending to near-neighbor tools such as data archiving, ETL, and hardware-based disk storage volume replication, in an attempt to fill the gap in the market. However, there is still a glaring unmet need, and the greatest productivity benefits remain largely untapped.

An Emerging Approach

Advanced TDM teams are looking to new technologies to make the right test data available securely, quickly, and easily without breaking the bank. While no single tool covers all of TDM, one thing is certain: most TDM toolsets are missing at least one element.

Most often, the missing piece of the puzzle is data virtualization. Unlike physical data, “virtual data” can be provisioned in minutes, making it easy to distribute test data to application teams. And unlike storage replication tools, data virtualization also equips end users with self-service data controls so that software testing can be performed iteratively without unnecessary delays.

A traditional request-fulfill model takes days or weeks with multiple handoffs. Self-service data is accessible in minutes.

Virtual data also comes with powerful data control features. It can be bookmarked and quickly reset, branched to test code changes in isolation, and rolled forward or backward to any point in time to facilitate integration testing—all of which can lead to massive software quality improvements, and ultimately, a better customer experience.
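To make these controls concrete, here is a minimal in-memory sketch of bookmark, reset, and branch. It is an illustration only: the `VirtualDataset` class and its method names are hypothetical and do not represent any vendor's API, and it models a dataset as a simple key-value mapping rather than a real database.

```python
class VirtualDataset:
    """Hypothetical in-memory stand-in for a virtual data copy."""

    def __init__(self, rows=None, history=None, bookmarks=None):
        # Each history entry is a snapshot that is never edited in place.
        self._history = list(history) if history else [dict(rows or {})]
        self._bookmarks = dict(bookmarks or {})

    @property
    def rows(self):
        """Return a copy of the current state."""
        return dict(self._history[-1])

    def write(self, key, value):
        """Record a change as a new snapshot instead of editing in place."""
        snapshot = dict(self._history[-1])
        snapshot[key] = value
        self._history.append(snapshot)

    def bookmark(self, name):
        """Label the current snapshot so it can be restored later."""
        self._bookmarks[name] = len(self._history) - 1

    def reset(self, name):
        """Rewind to a bookmarked snapshot, discarding later changes."""
        index = self._bookmarks[name]
        self._history = self._history[: index + 1]
        # Bookmarks that pointed past the rewind target no longer exist.
        self._bookmarks = {n: i for n, i in self._bookmarks.items() if i <= index}

    def branch(self):
        """Create an independent copy that shares the existing snapshots."""
        return VirtualDataset(history=self._history, bookmarks=self._bookmarks)


# A tester bookmarks a clean state, makes a change, branches, then resets.
orders = VirtualDataset({"order_1": "pending"})
orders.bookmark("clean")
orders.write("order_1", "shipped")

experiment = orders.branch()          # isolated copy for a code change
experiment.write("order_2", "pending")

orders.reset("clean")                 # original returns to the bookmark
```

After the reset, `orders` is back at its bookmarked state while `experiment` keeps its own changes, which is the isolation that branching is meant to provide. Rolling forward in time is not modeled here.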

Advanced IT organizations are taking TDM one step further with integrated data masking and distribution. Administrators can not only deliver virtual data to application teams in minutes, but also execute repeatable masking algorithms to streamline the handoff from Ops to Dev.
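One common way to make masking repeatable is to derive the masked value from a keyed hash, so the same input always produces the same output across runs and environments. The sketch below assumes that approach; the function names and the `@example.com` placeholder domain are illustrative choices, not a description of any specific product's masking algorithm.

```python
import hashlib
import hmac


def mask_value(value: str, secret: bytes, length: int = 12) -> str:
    """Return a deterministic, non-reversible token for a sensitive value."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_email(email: str, secret: bytes) -> str:
    """Replace an email address with a stable placeholder address."""
    # Normalize first so "Jane@corp.com" and "jane@corp.com" mask identically.
    normalized = email.strip().lower()
    return f"user_{mask_value(normalized, secret)}@example.com"
```

Because the output depends only on the input and the secret key, a customer's email masks to the same value in every table and every refresh, so joins between masked tables still line up.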

Last but not least, virtual data (which can be a copy of any data stored in a relational database or file system) shares common data blocks across copies and, as a result, occupies one-tenth the space of physical data. For businesses that are growing rapidly, the ability to flatline infrastructure support costs can have a transformative impact on not only the bottom line, but also the top line, as those savings are reinvested in more strategic growth initiatives.
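The space savings from block sharing can be illustrated with a toy model. The sketch below assumes a content-addressed block store in which each copy is only a list of block references and a write stores just the changed block. The class names, block counts, and change rates are hypothetical, chosen only to show the arithmetic.

```python
import hashlib


class BlockStore:
    """Stores each distinct block once, keyed by a hash of its content."""

    def __init__(self):
        self.blocks = {}

    def put(self, data: bytes) -> str:
        block_id = hashlib.sha256(data).hexdigest()
        self.blocks.setdefault(block_id, data)
        return block_id

    def get(self, block_id: str) -> bytes:
        return self.blocks[block_id]


class VirtualCopy:
    """A copy is a list of references into the shared block store."""

    def __init__(self, store, block_ids):
        self.store = store
        self.block_ids = list(block_ids)

    def clone(self):
        # Cloning copies references only; no block data is duplicated.
        return VirtualCopy(self.store, self.block_ids)

    def read(self, index):
        return self.store.get(self.block_ids[index])

    def write(self, index, data):
        # Only the changed block is stored; other copies are unaffected.
        self.block_ids[index] = self.store.put(data)


def storage_footprint(copies, blocks_per_copy=100, changed_per_copy=2):
    """Return (physical blocks stored, logical blocks visible to users)."""
    store = BlockStore()
    source = VirtualCopy(
        store, [store.put(f"block-{i}".encode()) for i in range(blocks_per_copy)]
    )
    for c in range(copies):
        clone = source.clone()
        for i in range(changed_per_copy):
            clone.write(i, f"copy-{c}-block-{i}".encode())
    physical = len(store.blocks)
    logical = blocks_per_copy * (copies + 1)
    return physical, logical
```

With these assumed numbers, `storage_footprint(10)` stores 120 physical blocks to serve 1,100 logical blocks across the source and its ten copies. The actual ratio depends on how much each copy diverges from the source.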

Better TDM means faster delivery of apps, and ultimately, faster achievement of business objectives. Companies that fail to invest in TDM put themselves at risk of falling behind.

Make your test data management strategy a route to success, not your downfall.