When I went to the job interview for my current position, the interviewer kept saying that I was going to become a tester/test manager in a project – and not for a product. I must admit that I didn’t pay much attention to that; when you have been working with Quality Assurance and test for more than a decade in areas as different as Fashion and Content Management for Tools and Groceries, you have learnt that applications are applications – no matter the business they relate to. So in my head I just heard this as potatoes-potatoes.

I got the job, and the days showed me the differences and the benefits. Below I am going to describe both situations and the differences inherent in moving from a project to a product perspective.

When I joined, there was one major customer on the project. Having almost exclusively one customer gives you the option to please them to a degree I had never tried before. And unfortunately I probably never will try it again. You never needed to have any serious doubt about how the application was supposed to work. Of course we had specifications, contracts and so forth, but in any case of doubt you just picked up the phone and asked the customer directly. And that also went the other way. Everything in everyday work was very easygoing and informal.

But every coin has a flip side as well. The paying customer naturally decided what to include in the software they paid for. And that also indirectly dictated the quality. You can design a feature in several ways. Maintainability and scalability involve things that matter in the product world but seem less important when you are working in a one-customer project. Here you would often put your focus on the functionality – and leave behind ideas about making future maintenance easier and coping with e.g. a changed environment.

After the arrival of new customers and promising future opportunities, we are now going to leave the life in the project world and become a product – taking and giving the benefits that come along.

In the project we had this:

- The delivered application should not work per se. It should be testable.

- Waterfall as development method (maybe leaning on cowboy-coding)

- Application only grew by customer-paid features

- GUI: The Customer more or less decided all labels, tooltips etc.

- Manual Test: Customer data was the best.

- Unit Test: Again, customer data is the best

- Automated GUI test: and once again – customer data is the best

The phrase “The road to hell is paved with good intentions” was in the back of our heads when we decided which steps to start with. We have really tried to keep our eyes on the goal and not spend time on nice Quality Assurance stuff which – observed in isolation – seemed fine. Every unique product needs its own combination of quality assurance, and that combination surely also changes over the product’s lifetime. So at the moment we have decided to go for:

- Quality Assured deliveries – using ISTQB and TMap® Next

- Best test data: Created by equivalence class analysis, boundary value analysis etc.

- Development method: SCRUM

- Single main line

- Controlling feature delivery with Feature Toggling

- Controlling QA with a deployment pipeline including unit, end-to-end, and manual tests

Quality Assured deliveries – using ISTQB and TMap® Next

I am a strong believer in the Agile Manifesto – and I read the part “Working software over comprehensive documentation” as saying that documentation is needed – just don’t write documentation that is not needed or not maintained. In our case the documentation (and processes) is now a mixture of ISTQB and TMap® Next (from Sogeti).

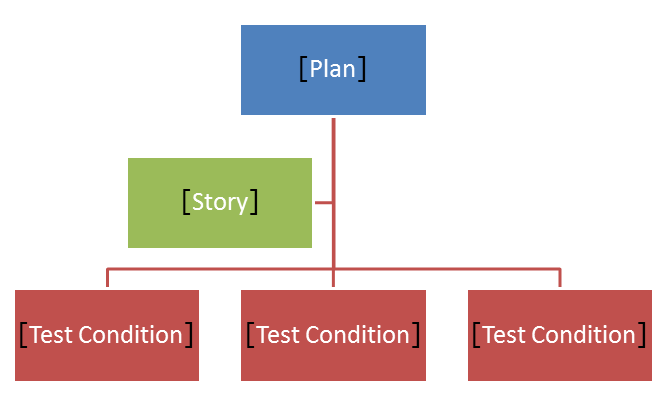

Figure: Comprehensive documentation: Stories get corresponding test stories that are split into test tasks, which in turn hold logical test cases along with equivalence classes and boundary value analysis to use as parameters for the test. No full test cases are written.

Above all we have a test policy, and all larger new features have a test plan that corresponds to the test policy. We use SCRUM as our development method and from that we get the features explained as stories. Stories get corresponding test stories that are split into test tasks, which in turn hold logical test cases along with equivalence classes and boundary value analysis to use as parameters for the test. No full test cases are written. This makes maintaining the documentation feasible – though it requires quite high domain knowledge from the tester. The main future goal is also to manually test only what is left behind by the unit and end-to-end tests.

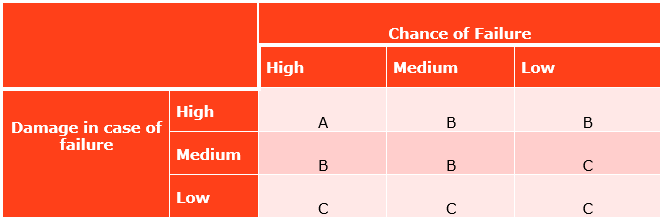

From TMap® Next we have adopted the Product Risk Analysis tool (PRA). We want to test smarter – not harder. And when you can’t test everything, the risk based approach is the only way to go.

The product risks are determined in cooperation with the client and the other parties involved. The Product Risk Analysis (PRA) comprises two steps: making an inventory of the risks that are of interest, and classifying the risks. During the risk assessment the test goals are formulated. The extent of the risk depends on the chance of failure (how big is the chance that it goes wrong?) and on the damage to the organization if the failure actually occurs. The risk determines the thoroughness of the test. Risk class A is the highest risk and C is the lowest. The test is subsequently focused on covering the risks in the highest risk class as early as possible in the test project.

Figure: Product Risk Analysis – Finding the risk class:

Product Risk = Chance of failure x Damage

where Chance of failure = Chance of defect x Frequency of use
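To make the two formulas concrete, here is a minimal sketch in Python. The 1 to 3 scoring scale, the thresholds for the classes A, B and C, and the feature names are assumptions made up for this illustration; they are not taken from TMap® Next or from our own risk tables.

```python
def chance_of_failure(chance_of_defect, frequency_of_use):
    # Chance of failure = Chance of defect x Frequency of use
    return chance_of_defect * frequency_of_use


def product_risk(chance_of_defect, frequency_of_use, damage):
    # Product Risk = Chance of failure x Damage
    return chance_of_failure(chance_of_defect, frequency_of_use) * damage


def risk_class(risk):
    # Hypothetical thresholds for scores on a 1..3 scale (maximum risk 27).
    if risk >= 18:
        return "A"
    if risk >= 6:
        return "B"
    return "C"


def prioritise(features):
    """Sort (name, defect, use, damage) tuples so the highest risk comes first."""
    scored = [
        (name, product_risk(defect, use, damage))
        for name, defect, use, damage in features
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(name, risk, risk_class(risk)) for name, risk in scored]


# Hypothetical features, each scored 1 (low) to 3 (high).
EXAMPLE_FEATURES = [
    ("print label", 1, 1, 2),
    ("save plan", 3, 3, 3),
    ("export report", 2, 1, 3),
]
```

Calling `prioritise(EXAMPLE_FEATURES)` puts "save plan" first, which matches the idea of covering the highest risks as early as possible.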

The manual test is also combined with bug hunts and exploratory testing.

Feature Toggling

We are working towards modularization of the code, but with a legacy codebase of more than half a million lines that is not a job that is done overnight.

Our legacy code is in no way designed to take customer-specific features into account. To meet this challenge we have chosen to feature toggle a lot of functionality. We use “feature toggling” in the sense that the features are limited only via the user interface of the application – everything is always included anyway – just not all visible to all customers. But this adds yet another factor to the matrix of possible setups.
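The idea can be sketched in a few lines of Python. This is only an illustration of toggling at the user-interface level: the feature names, the customer names and the data layout are hypothetical and do not show how our application stores its toggles.

```python
# Every feature is always shipped; the names are hypothetical.
ALL_FEATURES = ("planning", "reporting", "export")

# Per-setup toggles decide only what the user interface shows.
TOGGLES = {
    "standard": {"planning", "reporting", "export"},
    "customer_a": {"planning", "export"},
    "customer_b": {"planning"},
}


def visible_features(setup):
    """Return the features shown in the GUI for a given setup."""
    enabled = TOGGLES.get(setup, set())
    return [feature for feature in ALL_FEATURES if feature in enabled]


def setup_matrix():
    """One row per (setup, feature) pair: each new setup adds a full row set."""
    return [
        (setup, feature, feature in TOGGLES[setup])
        for setup in sorted(TOGGLES)
        for feature in ALL_FEATURES
    ]
```

With three setups and three features the matrix already has nine cells, and it grows with every new customer or feature.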

At the moment we place the highest priority on having a standard system which gets most of the Quality Assurance attention, even though no customers are running the standard system. The next level of attention goes to the customer-specific setups. But a total test is no longer done here. The test is limited to where there are differences from the standard setup. This way of working takes a lot more resources and requires a significant amount of documentation compared to what we are used to, but until we have a fully modularized solution we see this as the only way, even though the Quality Assurance burden rises every time we get a new customer on board or add a new feature to be tested with the different setups.

“End-to-end test”

Until recently, we had an automated GUI test. It was huge and it really covered a lot of the application. Perhaps a little too much: the test tried to cover way too many details that should have been tested at unit test level. It became more and more unstable and hard to maintain as the lines of code were growing. A change in personnel and almost no documentation of the test design made maintenance almost impossible. Having more than one major customer and a new application server just killed the automated GUI test. Instead we are going for an automated end-to-end test in the code layers just before the GUI. The test doesn’t execute through the GUI, but can be described as end-to-end because it still holds the “contract” as if you had the GUI included in the test.

To make this come easily you need to design your code to be “testable”. The development department is struggling to get everything in line with the model-view-controller pattern, where you separate the GUI logic from the presentation. The architecture is also designed so that you – if you should want to in the future – can combine it with GUI test, using the already designed tests. To do this you need a “runner for the GUI”. The benefit of this way forward is that you can test both with and without the GUI – with the same tests – which will affect the execution time and the feedback cycle.
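A minimal Python sketch of the runner idea follows. All class and method names are hypothetical; the point is only that the test is written once against a runner, and that the runner decides whether the steps go straight to the layer below the GUI or, in a possible future, through the GUI itself.

```python
class OrderService:
    """Hypothetical business logic that lives just below the GUI."""

    def __init__(self):
        self._lines = []

    def add_line(self, name, quantity, unit_price):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._lines.append((name, quantity, unit_price))

    def total(self):
        return sum(quantity * price for _, quantity, price in self._lines)


class ServiceRunner:
    """Executes test steps directly against the service layer, without a GUI."""

    def __init__(self):
        self._service = OrderService()

    def add_line(self, name, quantity, unit_price):
        self._service.add_line(name, quantity, unit_price)

    def read_total(self):
        return self._service.total()


class GuiRunner:
    """Placeholder for a future runner that performs the same steps via the GUI."""

    def add_line(self, name, quantity, unit_price):
        raise NotImplementedError("would fill in and submit the GUI form here")

    def read_total(self):
        raise NotImplementedError("would read the total from the GUI here")


def check_order_total(runner):
    """The test itself: it knows the contract, not how the steps are executed."""
    runner.add_line("book", 2, 10)
    runner.add_line("pen", 3, 1)
    return runner.read_total() == 23
```

Running `check_order_total(ServiceRunner())` exercises the contract without any GUI; a working `GuiRunner` could later reuse the same check unchanged.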

In some cases (mainly because of legacy code that isn’t true to the model-view-controller pattern) it is difficult to implement the test. In cases like that we try to make server calls that simulate the client.

It is the developers’ responsibility that the code works as they think it is intended when they design the unit and integration tests. When we move to the top level of the V-model the testers take over the responsibility: the testers specify the test – and the developers only implement it.

Continuous Integration

In the past the one major customer had a very strong influence on the release cycle and on when new features were added. To meet this we used Feature Branches. Almost every new feature was developed in an isolated branch and was then merged into one or sometimes several release branches. It takes no math gene to calculate that this calls for a lot of test. When we had the automated GUI test, this was in some way feasible. But when the automated GUI test died, this was no longer a realistic way of working (please also remember the matrix of setups growing when we move from a single-customer setup to a multi-customer setup).

Abandoning feature branches means that in everyday work all new features are now developed within a shared mainline. The developers are all running working copies of the mainline and several times a day they merge their commits into the mainline. On the mainline we then run the automated unit and “end-to-end” tests along with the manual testing.

The test is driven and maintained by the developers. And that is also new to us. In the future the test will be maintained along with the code, as a natural part of the development.

The end-to-end test is also run as a part of a build pipeline. The pipeline runs every time a commit is made to the repository. Every run is seen as a potential release candidate – and it is the pipeline’s job to dismiss candidates before they even land in the Quality Assurance department. The pipeline currently has the following steps: build/unit test – end-to-end test – manual test – release (make the final distribution and place it on the FTP). Therefore a commit that can’t pass the entire end-to-end test will never make it to a manual test, and can therefore not be released.
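The gating behaviour of the pipeline can be sketched as follows. This is a simplified Python model, not our build server configuration: the stage names come from the text above, while the `checks` callables and the commit identifiers are hypothetical stand-ins for the real build, test and release jobs.

```python
# Stage order as described in the text.
STAGES = ("build/unit test", "end-to-end test", "manual test", "release")


def run_pipeline(commit, checks):
    """Run the stages in order and stop at the first one that fails.

    `checks` maps each stage name to a callable taking the commit and
    returning True (pass) or False (fail). Returns a tuple of
    (released, executed_stages).
    """
    executed = []
    for stage in STAGES:
        executed.append(stage)
        if not checks[stage](commit):
            # The candidate is dismissed; later stages never see it.
            return False, executed
    return True, executed


def always_pass(commit):
    return True


def always_fail(commit):
    return False


# Hypothetical candidate that breaks the end-to-end test.
BROKEN_CHECKS = {
    "build/unit test": always_pass,
    "end-to-end test": always_fail,
    "manual test": always_pass,
    "release": always_pass,
}
```

With `BROKEN_CHECKS` the run stops after the end-to-end stage, so the candidate never reaches the manual test or the release step.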

Figure: A typical continuous integration cycle: “Continuous Integration (CI) is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily – leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible. Many teams find that this approach leads to significantly reduced integration problems and allows a team to develop cohesive software more rapidly.” (Martin Fowler, http://martinfowler.com/articles/continuousIntegration.html)

Test farm: VMware

When you have only one major customer there can be almost no discussion about the ideal test data. It should mirror customer data – and the same goes for the setup in general. In our case we didn’t go all the way here. We had something which was deemed to be “close enough”. The customer was running a clustered Windows environment, but they had accepted that we did the test on single Solaris servers. (The client was running on Windows PCs – with no official demands for test versions.)

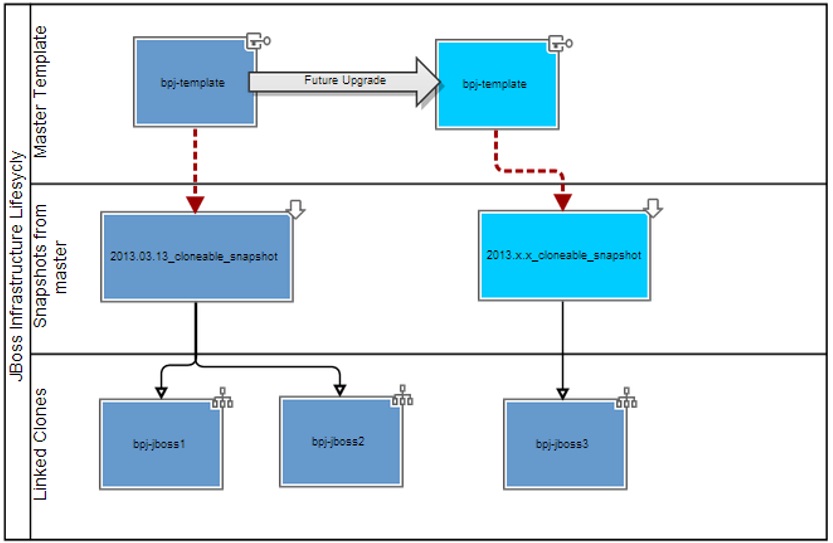

Now we are running JBoss on Linux, using VMware. Test data is mainly created by considering equivalence classes and boundary value analysis, combined with the insight we have gained from testing with customer data.
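As an illustration of how such test data can be derived, here is a small Python sketch for a numeric input field with a valid range. The field, its range of 1 to 100, and the offsets used to pick representatives are assumptions for the example only.

```python
def boundary_values(low, high):
    """Values on and directly around both edges of the valid range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_representatives(low, high):
    """One representative per equivalence class of an integer range."""
    return {
        "below range (invalid)": low - 10,
        "inside range (valid)": (low + high) // 2,
        "above range (invalid)": high + 10,
    }


def candidate_values(low, high):
    """Combine both techniques into one sorted list without duplicates."""
    values = set(boundary_values(low, high))
    values.update(equivalence_representatives(low, high).values())
    return sorted(values)
```

For a hypothetical quantity field that accepts 1 to 100, `candidate_values(1, 100)` gives nine values to test instead of every possible input.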

VMware gives us the option of almost unlimited test environments and the option to swap between setups in no time. In our earlier setup with few physical machines this was not an option. And now we can save snapshots of every delivery. Before, we had to choose what was important to save.

Figure: It’s important to use the correct snapshot before beginning a cloning process. Each snapshot represents a different state of the SOURCEVM (the VM from which a new clone is made) and might not always match each and every possible Bookplan release. Therefore, the Snapshot description field is used to store the supported Bookplan Version / Branch information which is updated manually along the release process.

Leadership/management challenges

It is not easy to change the way people have been working for years just by sending out an email. And we never expected it to happen like that. But we have spent quite a lot of time discussing how to move forward. In general it was easy to describe what we wanted – but hard to get it done.

As a tester it was hard to trust that the developers took over some of the test design. And as a developer it was hard to accept that the design in general had to change because of the test. We have taken the fights and surely we had to pick our battles. At the end of the day we all had to accept the art of the possible – but with an intact team spirit that grew from e.g. a newborn architecture/design group and SCRUM as a development process, we made it come true.

Tomorrow’s Quality assurance

As I have tried to describe, we have been forced to make some milestone decisions. It is not hard to dream up a perfect product with a perfect Quality Assurance solution that matches it. The real challenge is to give the best possible Quality Assurance that matches the product you have at any given moment. And that was a very important lesson learned. But together with the developers I have a dream about a modularized Bookplan product with fully implemented continuous integration, where any individual developer’s work is only a few hours away from the shared project state and can be integrated back in minutes. Any integration errors are found rapidly and will be fixed rapidly. Test design and self-testing are just a natural thing, and they include automated unit and “end-to-end” tests (including all business logic, which is now placed in the service layer) that cover as much as possible. Manual test will then be reduced to small health checks, first-time test of new features and exploratory testing combined with bug hunts. The responsibility for quality assurance will then be spread across more people, and I can once again say one of my most loved sentences: “Quality cannot be tested into products; it has to be built in by design”.

Figure: We have been forced to make some milestone decisions. It is not hard to dream up a perfect product with a perfect Quality Assurance solution that matches it. The real challenge is to give the best possible Quality Assurance that matches the product you have at any given moment. And that was a very important lesson learned.

The journey itself is also worth something – and even though you know you have far to go – you might be better off not knowing how far.

Sometimes the fastest way is not the best way to reach a destination. Sometimes you need to stop and take a look at the scenery to make sure you are going in the right direction. And maybe you even need to make a U-turn or ask for help to get back on track. The journey itself is also worth something. What you have learned on your way can never be taken away from you again. And in the quality assurance world you are always working with a moving target.

I will end this paper with a quote from Douglas Adams and tell you all that I have enjoyed the journey so far.

“Space is big. You just won’t believe how vastly, hugely, mind-bogglingly big it is. I mean, you may think it’s a long way down the road to the chemist’s, but that’s just peanuts to space.”

― Douglas Adams, The Hitchhiker’s Guide to the Galaxy

About the Author:

Tina Knudsen has been working with Quality Assurance for more than a decade. She is employed as a Programme Test Manager and holds an Academy Profession Degree in Computer Science and several certifications (e.g. in ISTQB and ITIL).

Test has been the red thread through all of her professional life. Already in high school she started with static test, proofreading for misspellings at the local newspaper. She has also tried electrical test of bare circuit boards and other similar things.