I. Digital Disruption by Chatbots

Chatbots are programs with a conversational user interface through which they interact with humans and with other programs alike. Thousands of chatbots have been built in the last few years; among the most popular are Amazon's Alexa, Apple's Siri, Google's Allo and the bots on Facebook Messenger. Superficially, a chatbot may seem like a fun way to ask an application to send a text, make a call or play music, but with machine learning and Natural Language Processing (NLP), chatbots are becoming increasingly sophisticated and can perform far more complex actions, ranging from offering investment advice and retrieving details of an insurance premium to booking a doctor's appointment and even diagnosing medical conditions. Over the last few years, they have become capable of holding human-like conversations rather than merely responding to commands. And with major software companies like Microsoft and Amazon providing low-cost chatbot development platforms, the creation and adoption of chatbots has grown rapidly. According to industry projections, approximately 80% of businesses may have implemented some form of chatbot automation by 2020, and up to $4.5 billion may be invested in chatbots and personal assistants by 2021. [1]

From these projections, it is safe to infer that while users' mode of communication may change considerably as they switch from the application's own UI to a chatbot that interfaces with the application, the chatbot is not going to replace the application itself.

It is also safe to assume that this paradigm shift will see users accessing a single chatbot UI to communicate with many applications, i.e. users adopt a hybrid approach. This implies that the user load on the chatbot server could be far more intense than on any single, isolated application. On the plus side, the chatbot server may not have to perform complex querying or data processing: it need only interpret each request, route it to the appropriate connected application, and relay the application's response back to the right user.

And since chatbots are still at a nascent stage, their adoption will only keep increasing, so we can expect significant growth in the user base in the coming years.

II. Myths Concerning Chatbots

While chatbots are receiving a lot of buzz these days, there are quite a few misconceptions surrounding them. Let's look at some of the popular myths:

A. Chatbots are Human or Humanoid in Nature

While bots are evolving steadily, it is too early to consider them comparable to humans. None of the chatbots has passed the Turing test yet. In fact, not all chatbots even use Artificial Intelligence (AI). A bot can perform actions accurately as long as it recognizes the right action triggers and maps them to the right intents. Such bots may be purely data-driven or keyword-driven, responding to selected trigger words with an appropriate predefined response.
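To make the idea of a keyword-driven bot concrete, here is a minimal Python sketch of one that uses no AI at all: it maps trigger words to intents and returns a predefined response. The intents, trigger words and responses are hypothetical examples, not taken from any particular product.

```python
import re
from typing import Optional

# Hypothetical trigger words mapped to intents; a real bot would load these from configuration.
INTENT_TRIGGERS = {
    "check_balance": {"balance", "funds"},
    "book_appointment": {"appointment", "book", "schedule"},
    "premium_details": {"premium", "insurance"},
}

# One canned (static) response per intent.
RESPONSES = {
    "check_balance": "Your balance is available in the Accounts section.",
    "book_appointment": "Sure, which day suits you?",
    "premium_details": "Your premium details have been sent to your inbox.",
}

FALLBACK = "Sorry, I did not understand that."


def detect_intent(utterance: str) -> Optional[str]:
    """Return the intent whose trigger words overlap most with the utterance, if any."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best_intent, best_hits = None, 0
    for intent, triggers in INTENT_TRIGGERS.items():
        hits = len(words & triggers)
        if hits > best_hits:  # ties keep the first intent found
            best_intent, best_hits = intent, hits
    return best_intent


def respond(utterance: str) -> str:
    """Pick the predefined response for the detected intent, or fall back."""
    intent = detect_intent(utterance)
    return RESPONSES[intent] if intent else FALLBACK
```

For example, `respond("What is my account balance?")` returns the `check_balance` response because "balance" is a trigger word, while an utterance with no trigger words gets the fallback.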

B. Chatbots Can Replace Applications

No matter how well a bot holds a conversation with a human, it can only perform the tasks that the application running in the background can perform. Chatbots are not substitutes for actual applications but an addendum that lets users interact with the application quickly and easily.

C. Chatbots Interact Through Texts Alone

Contrary to popular belief, chatbots are not limited to text. If enterprises like Amazon, Google and Apple have shown anything with Alexa, Google Now and Siri respectively, it is that "chat" can include voice-based interactions as well. Text is but one of the modes of communication available to chatbots.

D. Chatbots are Customer Service Center Specific

While many believe that chatbots are only deployed to chat with customers and resolve common issues, their reach is much wider. Chatbots are already present in the financial, automobile and social media sectors, among others.

E. Chatbots are the Silver Bullets to your Business Problems

While chatbots do make interaction easier, they are not a one-stop shop for all business problems. They can neither make humans redundant nor automate everything a business does; they can only facilitate business services via the chat medium.

III. Why Even Do it? Are Chatbots Really Up to the Mark?

While chatbots are slowly making their way into various businesses, it would be unwise to assume that this paradigm shift will be smooth. An application that can scale one-on-one conversations may seem appealing, but scripting the right conversation for the right customers is by no means simple, and one must accept that chatbots do fail to deliver seamless user experiences from time to time.

The primary reasons are obvious. Chatbots are still in the nascent stages of development, and users may expect the kind of meaningful conversation they would have with an actual human being. Moreover, today's bots are nowhere near emulating real human behavior. To make things worse, many organizations caught up in the chatbot hype have released numerous poorly performing chatbots to the market. Many of these bots do not adhere to even basic conversational interface standards, and many may not have been properly tested either. Improper testing and validation can even introduce new issues rather than solve an existing problem. For example, if it takes 3-4 steps to place an order via a website and your chatbot asks 15-20 questions to reach the same end point, the chatbot has overcomplicated the solution and the whole reason for adopting it is lost. Lastly, if the chatbot does not provide a fluid user experience, with correct and timely responses, it will drastically shrink your user base. So, testing for functional as well as non-functional requirements is critical to a successful bot adoption strategy.

IV. Loosely Coupled Chatbot Architecture

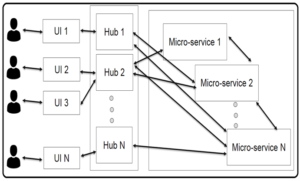

Before we look at how to test chatbots, it is critical to understand how they are designed. A chatbot architecture generally consists of a user interface (UI), which may be visual, audio or both, through which the end user interacts with the bot. The UI is intelligent enough to convert the user's input into a format the backend systems can easily understand. The UI is in turn linked to a hub, or rules/intelligence engine, which is in charge of two basic functions:

- Responding appropriately to the user and

- Maintaining the context of the conversation flow

The hub will then interface with the other upstream/downstream systems or 3rd party applications, to perform the necessary actions as requested by the user.
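The two hub functions listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: intent detection is assumed to have already happened upstream, and `order_service`/`status_service` are hypothetical stand-ins for calls to the connected applications.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

Service = Callable[[Dict[str, str]], str]


# Hypothetical downstream services; in practice these would be calls to the
# connected application's APIs.
def order_service(params: Dict[str, str]) -> str:
    return f"Order placed for {params.get('item', 'unknown item')}."


def status_service(params: Dict[str, str]) -> str:
    return f"Order for {params.get('item', 'unknown item')} is in transit."


@dataclass
class Session:
    """Conversation context kept by the hub for one user."""
    last_intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


class Hub:
    def __init__(self, routes: Dict[str, Service]):
        self.routes = routes
        self.sessions: Dict[str, Session] = {}

    def handle(self, user_id: str, intent: str, slots: Dict[str, str]) -> str:
        # Maintain the context of the conversation flow: remember values
        # (such as the item name) across turns for this user.
        session = self.sessions.setdefault(user_id, Session())
        session.slots.update(slots)
        session.last_intent = intent
        # Respond appropriately by routing to the connected service.
        service = self.routes.get(intent)
        if service is None:
            return "Sorry, I can't help with that yet."
        return service(session.slots)
```

With `hub = Hub({"place_order": order_service, "order_status": status_service})`, a user who first places an order for headphones and then simply asks for the order status gets "Order for headphones is in transit.", because the hub carried the item over from the earlier turn.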

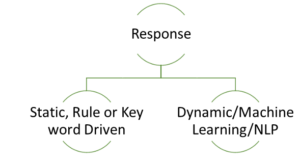

Chatbots can be classified into two types based on the responses they provide:

- Those that only provide static responses based on keywords or search criteria

- Those that generate responses using machine learning

Validating the first type is comparatively easy, because there is only a standard set of responses. Validating an ML-driven chatbot, however, requires manual intervention to judge the intelligence of its responses.
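Because a static bot can only return responses from a known set, its validation can be reduced to a table of inputs and exact expected outputs. A minimal Python sketch, assuming a hypothetical `static_bot` as the system under test; in practice the call would go to the real bot.

```python
from typing import Callable, List, Tuple


def static_bot(utterance: str) -> str:
    """Hypothetical keyword-driven bot standing in for the system under test."""
    text = utterance.lower()
    if "hours" in text or "open" in text:
        return "We are open 9am to 5pm, Monday to Friday."
    if "refund" in text:
        return "Refunds are processed within 5 business days."
    return "Sorry, I did not understand that."


# Each case pairs an input with the exact response the bot must return.
TEST_CASES: List[Tuple[str, str]] = [
    ("What are your opening hours?", "We are open 9am to 5pm, Monday to Friday."),
    ("When do you open?", "We are open 9am to 5pm, Monday to Friday."),
    ("How long does a refund take?", "Refunds are processed within 5 business days."),
    ("Tell me a joke", "Sorry, I did not understand that."),
]


def validate(bot: Callable[[str], str],
             cases: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (input, expected, actual) for every case whose response differs."""
    failures = []
    for utterance, expected in cases:
        actual = bot(utterance)
        if actual != expected:
            failures.append((utterance, expected, actual))
    return failures
```

Exact comparison is what makes static bots easier to validate; an ML-driven bot may phrase a correct answer differently on each run, so a check like this cannot decide on its own whether the response is right.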

Most chatbots are coupled with other applications and perform transactions on them. Each transaction corresponds to an interaction with a particular functionality of the coupled application, and need not be just texting or casual conversation.

The complexity, capability, stability and maturity of the intelligence/rules engine vary by provider. There are various providers, such as Amazon (Alexa), Google (Google Assistant) and Apple (Siri), and the engine can be hosted on a centralized cloud instance or locally within a particular environment behind a firewall.

The hub either interacts with a single microservice, which in turn interacts with other microservices to retrieve the appropriate response, or interacts directly with the internal microservices to respond to the user's request.

V. Challenges and Focus Points

While there are multiple tools to validate the responsiveness of a bot, measuring the scalability of the architecture and the usability, accuracy (or coherence), context sensitivity and relevance of the chat conversation is a challenge.

Chatbots can be classified as Static or Dynamic based on the responses they provide.

If the bot generates a static or keyword-driven response, i.e. it picks a response from a predefined list, then validating accuracy and context sensitivity is comparatively easy. It is much more complex if the response is generated in real time by a learning neural network or another machine learning model.

Manual intervention to verify accuracy, context sensitivity, recall and effective learning is required if the chatbot is AI/NLP/machine learning driven, because only a human can verify whether the AI is learning from, and emulating, human interactions.

VI. Key Differences and Considerations

While it would be naïve to compare chatbots and actual applications to decide which is better, since each serves a completely different purpose, it is necessary to understand how users perceive bots in order to come up with an appropriate strategy to test them thoroughly. Note also that while bots will not replace the actual applications, they would significantly reduce the user's interactions with the application's front end/UI, because in future the user may choose to perform the same actions via the chatbot's interface. For convenience, let us think of the chatbot as a one-stop shop for all the products.

The table below depicts how users perceive bots compared with applications.

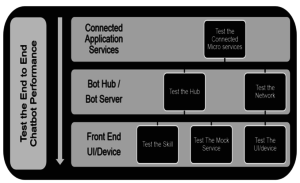

Here, we recommend a top-down approach to performance testing chatbots.

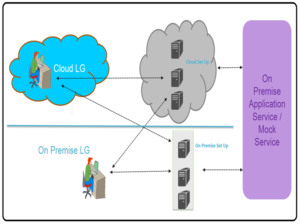

A. Test the Connected Application Services

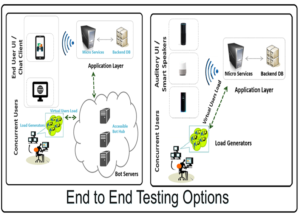

Before testing the bot itself, we have to make sure that the application being integrated with the bot works well on its own. Since chatbots are being integrated with existing applications, the existing data volumes and transactions/throughput, together with the predicted future workload, will hold good for validating the application services. A standard set of performance tests, confirming that the application services work within the stipulated SLA, will ensure that our applications do not become a bottleneck when later integrated with chatbots. Note that requests reaching the connected application environment could come from an external IP (outside the firm's firewall) or from within the firm's network, depending on the usage. A high-level representation of the testing approach is shown in the accompanying figure.
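Checking that the application services "work within the stipulated SLA" usually means comparing response-time percentiles against agreed limits. A minimal Python sketch using the nearest-rank percentile method; the sample times and SLA limits are illustrative values, not measurements.

```python
import math
from typing import Dict, List

# Illustrative response times in seconds, not real measurements.
SAMPLE_RESPONSE_TIMES = [0.8, 1.1, 0.9, 1.4, 2.6, 1.0, 1.2, 0.7, 1.3, 1.9]


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample covering pct% of the data."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_sla(samples: List[float], sla: Dict[float, float]) -> Dict[float, bool]:
    """Map each percentile to True if the observed value is within its SLA limit."""
    return {pct: percentile(samples, pct) <= limit for pct, limit in sla.items()}
```

With a hypothetical SLA of 2.0 s at the 90th percentile and 2.5 s at the 95th, `check_sla(SAMPLE_RESPONSE_TIMES, {90: 2.0, 95: 2.5})` reports the 90th percentile (1.9 s) as compliant and the 95th (2.6 s) as a breach, which points at a slow tail worth investigating before the chatbot is integrated.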

B. Test the Bot Hub

The second part is testing the bot hub, i.e. the server hosting the bot engine. The UI or smart speakers like Amazon Echo or Google Home have built-in capabilities to recognize voice, convert speech to text and package it into web service requests that are sent to the hub for processing. But the actual processing of the request, i.e. the identification of keywords or intents, happens at the server end.

There are two probable ways to go about this.

- We have access to the bot hub, because the client itself hosts the intelligent chatbot server that processes the requests.

This usually applies when the hosted server caters to requests from UI-based chat clients. We have complete control over the server that processes the requests coming in from the chat, so we can load the hub using mock services and validate its performance based on the resources it consumes to process requests and return responses, while continuously monitoring server health to ensure that the hub is not overloaded. (This is the less probable scenario, however, as most customers opt for readily available chatbot engines like Amazon's Alexa or Google Now.)
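When the hub is under our control, its backend calls can be pointed at a mock service so that hub performance is measured without loading the real application. A minimal sketch using only Python's standard library; the endpoint path, JSON payload and latency value are hypothetical, and in a real test the hub would be configured to call the mock's URL instead of the real backend.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

MOCK_LATENCY_SECONDS = 0.2  # hypothetical: tune to the backend's measured response time


class MockOrderService(BaseHTTPRequestHandler):
    """Stands in for a connected application service behind the hub."""

    def do_GET(self):
        time.sleep(MOCK_LATENCY_SECONDS)  # emulate backend processing time
        body = json.dumps({"status": "in transit", "path": self.path}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # silence per-request logging during load tests


def start_mock(port: int = 0) -> ThreadingHTTPServer:
    """Serve the mock in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), MockOrderService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_backend(url: str, timeout: float = 5.0) -> dict:
    """What the hub would do: call the connected service and parse its JSON reply."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read())
```

`ThreadingHTTPServer` handles each request on its own thread, so the mock can absorb concurrent calls from the hub while its fixed latency keeps the backend's contribution predictable.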

- We use a readily available bot service, in which case access to the hub of Amazon, Google or Microsoft will most probably be restricted.

Since communication happens over a secure network, with encrypted messages exchanged between the application and the server, emulating load directly on these private servers is not possible. For all intents and purposes, we must treat them as black boxes when validating performance. While monitoring or tuning their bottlenecks is beyond our purview, we can perform a certain level of governance testing to validate whether the agreed SLAs are being adhered to.
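Governance testing against a black box can only observe what happens at its boundary: how long each call takes and whether it fails. A minimal Python sketch, assuming `call` is a hypothetical zero-argument function wrapping whatever client or endpoint is used to reach the vendor-hosted hub.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class SlaReport:
    requests: int
    errors: int
    within_sla: int

    @property
    def compliance(self) -> float:
        """Fraction of all requests that succeeded within the SLA threshold."""
        return self.within_sla / self.requests if self.requests else 0.0


def probe_black_box(call: Callable[[], object], attempts: int,
                    sla_seconds: float) -> SlaReport:
    """Time repeated calls to a service we cannot instrument."""
    errors = within = 0
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            call()
        except Exception:
            errors += 1  # failed requests never count as compliant
            continue
        if time.perf_counter() - start <= sla_seconds:
            within += 1
    return SlaReport(attempts, errors, within)
```

The resulting compliance rate and error count can be compared with the SLA agreed with the provider; they say nothing about why a breach happened, which is consistent with treating the hub as a black box.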

However, load testing the bot hub is relatively easy irrespective of the mode of communication, whether via a visible chat client/browser or a smart speaker, because requests generally reach the server as web service calls (XML/VXML/SOAP requests). Emulating many such chat sessions from across the globe is straightforward with the various load testing tools currently on the market that support web service load testing and network emulation.
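The core of emulating many concurrent chat sessions is simple: each virtual user sends a scripted sequence of utterances, pauses as a human would, and records the latency of each reply. A minimal Python sketch; `send` is a hypothetical function that would wrap the actual web service call to the hub, and the think-time model is an assumption. Commercial tools add ramp-up, geographic distribution and protocol support on top of this.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def chat_session(send: Callable[[str], str], utterances: List[str],
                 think_time: float) -> List[float]:
    """One emulated user: send each utterance, record its latency, then pause."""
    latencies = []
    for text in utterances:
        start = time.perf_counter()
        send(text)
        latencies.append(time.perf_counter() - start)
        time.sleep(random.uniform(0, think_time))  # user "think time" between messages
    return latencies


def run_load(send: Callable[[str], str], utterances: List[str],
             users: int, think_time: float = 0.0) -> List[float]:
    """Run `users` concurrent sessions and return every observed latency."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(chat_session, send, utterances, think_time)
                   for _ in range(users)]
        return [lat for f in futures for lat in f.result()]
```

The returned latencies can then be summarized into percentiles and compared against the SLA, exactly as for a conventional web service.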

The only difference from validating traditional web services (especially when using smart speakers/assistants like Amazon Echo or Google Home) is that the services tested here correspond to certain skills or intents. With natural language as input, identifying the intent or the appropriate trigger keys is crucial to retrieving the necessary results. Our inputs will therefore comprise varied permutations and combinations of queries that retrieve similar results. It is critical to understand the many-to-one mapping that links inputs to the appropriate outputs, and to test the services with a varied range of inputs.
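The many-to-one mapping can be exercised systematically by combining phrasing fragments into every possible utterance and checking that each one resolves to the same intent. A minimal Python sketch; the fragments and intent names are hypothetical, and `classify` stands for whatever intent detection the bot under test performs.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

# Hypothetical phrasing fragments; every combination should map to the same intent.
VARIANTS: Dict[str, List[List[str]]] = {
    "order_status": [
        ["where is", "what's the status of", "track"],
        ["my order", "my package", "order 1234"],
    ],
    "store_hours": [
        ["when are you", "what time are you", "are you"],
        ["open", "open today", "open on sunday"],
    ],
}


def generate_utterances(variants: Dict[str, List[List[str]]]) -> List[Tuple[str, str]]:
    """Expand every fragment combination into (utterance, expected_intent) pairs."""
    cases = []
    for intent, slots in variants.items():
        for parts in product(*slots):
            cases.append((" ".join(parts), intent))
    return cases


def intent_mismatches(classify: Callable[[str], str],
                      cases: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (utterance, expected, detected) for every input resolved to the wrong intent."""
    mismatches = []
    for text, expected in cases:
        detected = classify(text)
        if detected != expected:
            mismatches.append((text, expected, detected))
    return mismatches
```

Here two intents with 3 x 3 fragments each yield 18 test inputs; the same generated set can also feed a load test so that the hub is exercised with realistic variety rather than one repeated query.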

Network emulation also plays a major role in assessing the performance of the system, because users spread across the globe, on varying network bandwidths and with various network providers, communicate with the server to get their tasks done. Most load generation tools come with numerous preset profiles for emulating varying bandwidths and restrictions.
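A rough feel for why network profiles matter comes from a first-order estimate: one round trip plus the time to transfer the payload at the available bandwidth. A minimal Python sketch; the two profiles are illustrative values, not vendor presets, and the model ignores connection setup, TLS handshakes and packet loss.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkProfile:
    name: str
    bandwidth_kbps: float  # available bandwidth in kilobits per second
    rtt_ms: float          # round-trip latency in milliseconds


def network_time_ms(profile: NetworkProfile, payload_bytes: int) -> float:
    """First-order network cost of one exchange: a round trip plus transfer time."""
    # bits divided by kilobits-per-second gives milliseconds
    transfer_ms = payload_bytes * 8 / profile.bandwidth_kbps
    return profile.rtt_ms + transfer_ms


# Illustrative profiles only.
BROADBAND = NetworkProfile("fast broadband", bandwidth_kbps=50_000, rtt_ms=20)
CONGESTED_MOBILE = NetworkProfile("congested mobile", bandwidth_kbps=400, rtt_ms=300)
```

For a 20 KB response, this gives about 23.2 ms on the broadband profile and 700 ms on the congested mobile profile, so the same hub response time can feel very different to users in different regions.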

C. Test the UI (User Interface)/Front End

This is again tricky, depending on whether communication happens via a visual UI or purely via audio.

- If the UI is a chat client, a variety of tools and timers associated with the UI can measure the time taken for the request to be processed and for the appropriate response to be displayed to the user.

- If the UI is auditory, response times at the UI can only be measured manually and are subject to human error. For smart speakers, the only option is to approximate end-user response times with a stopwatch, which roughly indicates how long it takes to get a response at the UI. From the observed response times, one can then subtract the time taken at each of the other layers (if resource monitoring is in place) to get the approximate time spent at the UI processing the request, converting it to the format accepted by the hub, passing it on to the application layer and back again.
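The subtraction described for auditory UIs is simple arithmetic, but writing it down makes the assumption explicit: the stopwatch total must cover every monitored layer. A minimal Python sketch; the layer names and timings are illustrative.

```python
from typing import Dict


def ui_time(end_to_end_s: float, layer_times_s: Dict[str, float]) -> float:
    """Approximate UI/device time: stopwatch total minus the monitored layers."""
    remainder = end_to_end_s - sum(layer_times_s.values())
    if remainder < 0:
        # A negative remainder means the readings are inconsistent, e.g. a
        # stopwatch error larger than the UI's own share of the time.
        raise ValueError("layer times exceed the end-to-end measurement")
    return remainder
```

For example, a stopwatch reading of 3.4 s with 0.5 s of network, 0.9 s at the hub and 1.2 s in the application leaves roughly 0.8 s for the smart speaker itself. Since human reaction time on a stopwatch can easily be a few tenths of a second, averaging several readings gives a steadier estimate.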

D. End to End Testing – Single End User with Overloaded Servers

This can be approached in two ways, depending on whether we have access to the bot hub. If we do, we can load test the bot hub, which in turn loads the application back end, and while the test is in progress, validate the front end and analyze how the back-end load affects response times at the front end. If instead the use case involves a smart speaker and a third-party hub to which we have no access, we can validate response times at the front end while we overload the application services. In that case, validating the performance of the bot hub is beyond our scope, and our analysis is restricted to validating adherence to SLAs.
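One way to reason about why back-end load shows up at the front end is a simple queueing approximation. The sketch below assumes the application service behaves like a single-server M/M/1 queue, where mean response time is R = S / (1 - U) with utilization U = λS (λ being the arrival rate and S the service time). This is a simplifying assumption for illustration, not a model the approach described here prescribes, and the UI and hub times are treated as fixed.

```python
def mm1_response_time(service_time_s: float, arrival_rate_per_s: float) -> float:
    """Mean time in system for an M/M/1 queue: R = S / (1 - U), with U = arrival_rate * S."""
    utilization = arrival_rate_per_s * service_time_s
    if utilization >= 1:
        raise ValueError("utilization >= 100%: the queue grows without bound")
    return service_time_s / (1 - utilization)


def front_end_time(ui_s: float, hub_s: float, service_time_s: float,
                   arrival_rate_per_s: float) -> float:
    """Response time seen by a single probing user while the back end is loaded."""
    return ui_s + hub_s + mm1_response_time(service_time_s, arrival_rate_per_s)
```

With S = 0.2 s, raising the background arrival rate from 2.5 to 4.5 requests per second takes utilization from 50% to 90% and the mean back-end time from 0.4 s to 2.0 s. The single probing user sees that five-fold increase even though nothing changed at the UI, which is exactly the effect the end-to-end test is designed to expose.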

VII. Reuse Existing/Available Materials and Build on Them

The tools and platforms we leverage to test chatbots do not vary greatly; rather, our approach needs to be restructured to meet the complexities and restrictions introduced by changing technologies. A generic set of tools and utilities for performance testing chatbots is listed below. (This list is not exhaustive; many other tools are readily available.)

The above set of tools and utilities helps measure the general non-functional requirements (NFRs) that one checks for performance. But to accurately assess the performance of a chatbot, there are certain chatbot-specific NFRs that can only be validated through manual intervention, because analyzing and validating their success criteria requires a degree of cognizance that our validation tools do not possess. These NFRs include Intent Retrieval (is the bot able to properly understand the intent of the questions posed to it?), Accuracy (how many responses are correct?), Consistency (does the system give correct responses consistently, or was a correct response a one-off event?), Decision Steps (how many follow-up questions or interactions are required before it arrives at the desired response?), Comparative Productivity Improvement (how much time does the bot actually save compared with manually using the application to get the same results?) and Recall and Personalization (is the bot able to recall previous requests and provide customized responses to individuals?) (Kozhaya, 2016). These currently require human intervention and cannot be automated with our current tool set. But validating these NFRs both in isolation and while the connected system is under load gives an approximate assessment of the chatbot's usability and its ability to provide a positive user experience.
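Although the judgements themselves need a human, tallying them can be automated. A minimal Python sketch, assuming testers record for each question whether the bot understood the intent, whether the response was correct and how many follow-up steps it took; the field names and sample records are hypothetical, and only some of the NFRs above are covered.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Judgement:
    """One test conversation, scored by a human tester (field names are illustrative)."""
    question: str
    intent_understood: bool  # Intent Retrieval: did the bot grasp what was asked?
    response_correct: bool   # Accuracy: was the final answer right?
    decision_steps: int      # Decision Steps: follow-up interactions before the answer


def intent_retrieval_rate(records: List[Judgement]) -> float:
    return sum(r.intent_understood for r in records) / len(records)


def accuracy(records: List[Judgement]) -> float:
    return sum(r.response_correct for r in records) / len(records)


def mean_decision_steps(records: List[Judgement]) -> float:
    return sum(r.decision_steps for r in records) / len(records)


def consistency(repeat_results: List[bool]) -> float:
    """Share of repeated runs of the same question that a tester judged correct."""
    return sum(repeat_results) / len(repeat_results)


# Illustrative tester records, not real results.
SAMPLE = [
    Judgement("Where is my order?", True, True, 1),
    Judgement("Change my delivery address", True, False, 2),
    Judgement("Can I pay in instalments?", False, False, 4),
    Judgement("What are your opening hours?", True, True, 1),
]
```

For the sample records this yields an intent retrieval rate of 0.75, an accuracy of 0.5 and an average of 2.0 decision steps. Collecting the same scores once with the connected system idle and once under load shows whether load degrades the conversational quality, not just the speed.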

VIII. Don’t Miss the Chatbots Train!

2018 is predicted to be the year of conversational chatbots. Bots are expected to dominate in areas such as retail, customer services, personalized or local services, social connections/interactions or even conversational companions.

The use cases are virtually limitless: finding out whether the product you are looking for is available or out of stock at your nearest retail outlet without even having to browse or touch your phone; talking to a chatbot rather than calling a 1-800 number with a mundane set of prerecorded instructions; or hailing the nearest available cab/Uber to get to a movie that you booked for yourself and your friends using the same smart assistant.

It is evident from the current rate of adoption that chatbots can do a lot right out of the box. So, if businesses are to excel after adopting chatbots, they must also ensure that the user experience they provide remains smooth, with seamless interactions and minimal or zero glitches, to ensure customer satisfaction and increase retention. We need to be prepared and make sure our bots are ready for prime time by appropriately testing both our bots and our applications, to remain relevant.

IX. References

Debecker, A. (2017, August 23). 2018 Chatbot Statistics – All The Data You Need. Retrieved from ubisend.com: https://blog.ubisend.com/optimise-chatbots/chatbot-statistics

Kozhaya, J. (2016, December 19). Chatbot cognitive performance metrics: accuracy, precision, recall, confusion matrix. Retrieved from developer.ibm.com: https://developer.ibm.com/dwblog/2016/chatbot-cognitive-performance-metrics-accuracy-precision-recall-confusion-matrix/